Training a model with hundreds of billions of parameters isn’t just hard: it’s impossible on a single GPU. Even the most powerful data-center GPUs today, like the NVIDIA H100 with 80GB of memory, can’t hold a full copy of GPT-3, let alone the larger models that followed it. So how do companies train these models at all? The answer lies in splitting the model across many devices using model parallelism, and more specifically, pipeline parallelism.

Why Single GPUs Can’t Handle Large Models

A model like GPT-3 has 175 billion parameters. Stored as 32-bit floating-point numbers, the weights alone take up about 700GB of memory. Even if you use 16-bit precision (half precision), you’re still looking at 350GB. That’s more than four times the memory of an 80GB H100. And that’s just the weights. You also need memory for gradients, optimizer states, and activations (the intermediate results computed during the forward and backward passes). A common rule of thumb for mixed-precision training with Adam is about 16 bytes per parameter for weights, gradients, and optimizer states, which puts GPT-3 at roughly 2.8TB before activations. No single GPU can do that.

Data parallelism, the most common training method, won’t solve this. In data parallelism, every GPU holds a full copy of the model. You split up the input data, run the same model on each GPU, then average the gradients. It works great for smaller models, but if your model doesn’t fit on one GPU, data parallelism is useless. You can’t split the data if you can’t even fit the model. That’s where model parallelism comes in.

What Is Model Parallelism?
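The weight-memory arithmetic from the previous section (4 bytes per parameter at 32-bit, 2 bytes at 16-bit) can be checked in a few lines. This is a minimal sketch: the function name `weight_memory_gb` is made up for illustration, it uses decimal gigabytes (1 GB = 10^9 bytes) to match the figures in the text, and it deliberately leaves out gradients, optimizer states, and activations.

```python
def weight_memory_gb(num_params: int, bytes_per_param: int) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


GPT3_PARAMS = 175_000_000_000  # parameter count quoted in the text
GPU_MEMORY_GB = 80             # H100 capacity quoted in the text

fp32_gb = weight_memory_gb(GPT3_PARAMS, 4)  # 32-bit floats: 4 bytes each
fp16_gb = weight_memory_gb(GPT3_PARAMS, 2)  # 16-bit floats: 2 bytes each

print(f"fp32 weights: {fp32_gb:.0f} GB")
print(f"fp16 weights: {fp16_gb:.0f} GB")
print(f"fp16 weights vs one GPU: {fp16_gb / GPU_MEMORY_GB:.2f}x")
```

Swapping in a different parameter count or precision shows quickly whether the weights of a given model could even be loaded on one device.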

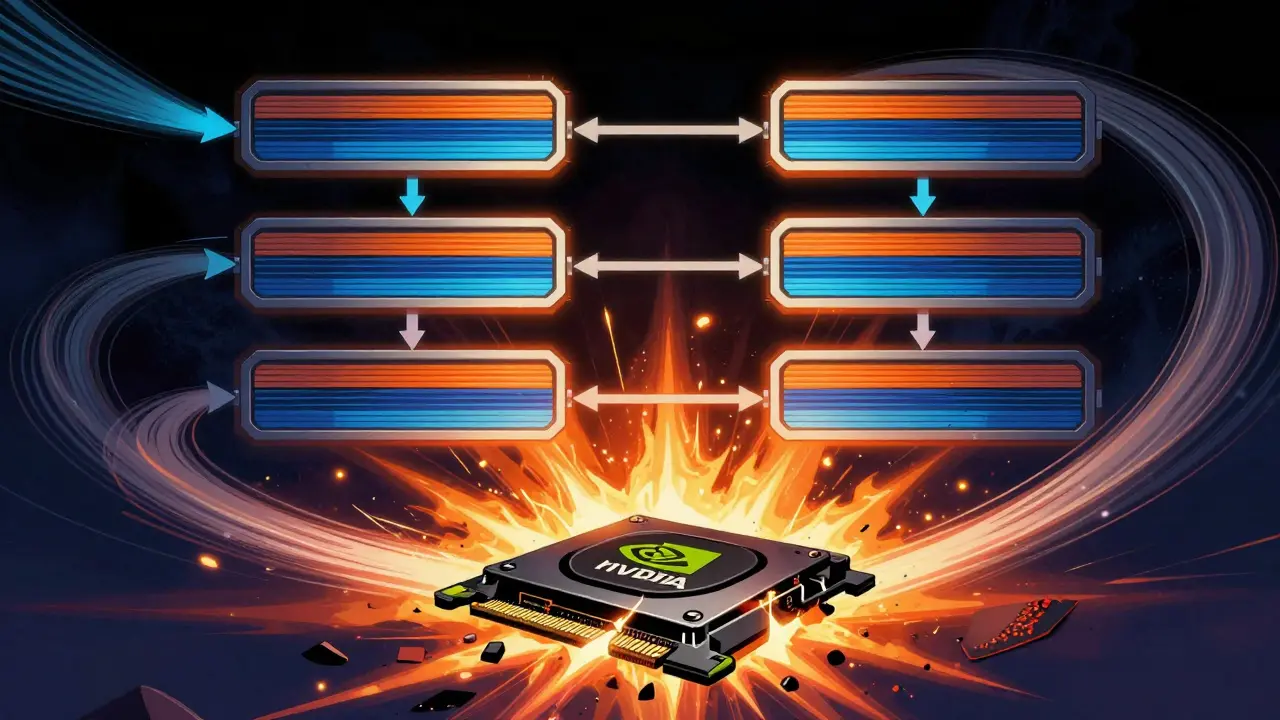

Model parallelism means splitting the model itself across multiple devices. Instead of every GPU running the whole model, each GPU runs only a part of it. Think of it like building a car: one team installs the engine, another the transmission, another the wheels. Each worker handles a piece, and the car moves from station to station.

There are two main types of model parallelism: tensor parallelism and pipeline parallelism. Tensor parallelism splits the work inside individual layers, for example by giving each GPU a subset of the attention heads or a slice of a weight matrix. It’s powerful but communication-heavy, because the GPUs sharing a layer have to exchange partial results in every layer of every forward and backward pass. Pipeline parallelism is simpler and only needs communication between neighboring stages, which is why it scales well across many machines.

How Pipeline Parallelism Works

Pipeline parallelism splits the model by layers. Imagine a 48-layer transformer. You divide it into four chunks of 12 layers each. Each chunk gets assigned to a different GPU. During training:

- The first GPU takes the input, runs its 12 layers, and sends the output (activations) to the second GPU.

- The second GPU runs its 12 layers and passes results to the third.

- The third passes to the fourth.

- The fourth computes the loss and sends gradients backward through the pipeline.
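The forward half of the steps above can be sketched in plain Python. This is a toy under loud assumptions: each "layer" is just a function that adds 1 to a number, the "GPUs" are list entries, and the helper names `split_into_stages` and `run_stage` are made up for illustration. A real system would place each stage on its own device and send activations over the interconnect.

```python
from typing import Callable, List

Layer = Callable[[float], float]


def split_into_stages(layers: List[Layer], num_stages: int) -> List[List[Layer]]:
    """Split the layers into contiguous, equally sized chunks, one per stage."""
    if len(layers) % num_stages != 0:
        raise ValueError("this sketch assumes the layers divide evenly")
    per_stage = len(layers) // num_stages
    return [layers[i:i + per_stage] for i in range(0, len(layers), per_stage)]


def run_stage(stage: List[Layer], activation: float) -> float:
    """Run one stage's layers in order and return the output activation."""
    for layer in stage:
        activation = layer(activation)
    return activation


# Toy 48-layer "model": every layer adds 1 to its input.
layers: List[Layer] = [lambda x: x + 1.0 for _ in range(48)]
stages = split_into_stages(layers, num_stages=4)

activation = 0.0
for gpu_index, stage in enumerate(stages):
    # In a real pipeline this hand-off is a send/receive between devices.
    activation = run_stage(stage, activation)
    print(f"stage {gpu_index}: ran {len(stage)} layers, output = {activation}")
```

Note that each stage only starts once the previous one has finished, so in this naive form only one "GPU" is ever busy at a time.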

Micro-Batching: Fixing the Idle Problem

Run naively, this pipeline wastes most of its hardware: while one GPU works on the batch, the others sit idle waiting for their turn. That idle time is called the pipeline bubble. The solution? Micro-batching. Instead of sending one big batch through the pipeline, you split it into smaller pieces, say 16 micro-batches. Now, as soon as the first micro-batch reaches GPU 2, GPU 1 can start processing the second micro-batch. The pipeline fills up like water flowing through pipes.

Following the analysis in the original GPipe paper (2019), with P pipeline stages and M micro-batches the bubble takes up about (P-1)/(M+P-1) of each training step. For an 8-stage pipeline fed one batch at a time, that is 7/8, or 87.5% idle. With 16 micro-batches it falls to 7/23, about 30%, and it takes 49 micro-batches to get down to 12.5%. GPU utilization climbs from 12.5% toward 90% as the micro-batch count grows. This is why every major system (Megatron-LM, ColossalAI, SageMaker) uses micro-batching by default. It’s not optional. It’s the difference between training taking days versus weeks.
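A common idealized model of a GPipe-style schedule puts the idle share of a step at (P-1)/(M+P-1) for P equally fast stages and M micro-batches. The sketch below computes that fraction and draws the forward-pass timeline it comes from. It assumes every stage takes exactly one time step per micro-batch and ignores communication; `bubble_fraction` and `forward_timeline` are illustrative names, not part of any library.

```python
from typing import List, Optional


def bubble_fraction(num_stages: int, num_microbatches: int) -> float:
    """Idle share of a step in the idealized model: (P - 1) / (M + P - 1)."""
    return (num_stages - 1) / (num_microbatches + num_stages - 1)


def forward_timeline(num_stages: int, num_microbatches: int) -> List[List[Optional[int]]]:
    """One row per stage, one column per time step.

    Each entry is the micro-batch index being processed, or None when idle.
    Stage s can only start micro-batch m at step m + s, after stage s - 1
    has finished it.
    """
    total_steps = num_microbatches + num_stages - 1
    timeline = []
    for stage in range(num_stages):
        row: List[Optional[int]] = []
        for step in range(total_steps):
            microbatch = step - stage
            row.append(microbatch if 0 <= microbatch < num_microbatches else None)
        timeline.append(row)
    return timeline


# Draw a small pipeline: digits are micro-batch indices, dots are idle slots.
for stage, row in enumerate(forward_timeline(num_stages=4, num_microbatches=4)):
    cells = " ".join("." if mb is None else str(mb) for mb in row)
    print(f"GPU {stage}: {cells}")

# Idle share for an 8-stage pipeline at different micro-batch counts.
for m in (1, 16, 49):
    print(f"P=8, M={m:>2}: bubble = {bubble_fraction(8, m):.1%}")
```

The backward pass has the same staircase shape in reverse, so under these assumptions the idle fraction of the whole step matches the forward-only picture.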

Grouped vs. Interleaved Scheduling

There are two ways to schedule pipeline execution:

- Grouped: All forward passes happen first, then all backward passes. This means fewer communication rounds but higher memory usage because you have to store all activations until the backward pass starts.

- Interleaved: Forward and backward happen alternately. This uses less memory but creates more communication overhead and can leave some GPUs idle while waiting for gradients.
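The memory difference between the two schedules can be made concrete by counting how many micro-batches each stage must keep activations for at its peak. The sketch below assumes the second schedule behaves like a one-forward-one-backward (1F1B) schedule, which is one reading of "forward and backward happen alternately" and not something the text spells out. The function names are hypothetical.

```python
from typing import List


def peak_in_flight_grouped(num_stages: int, num_microbatches: int) -> List[int]:
    """All forwards, then all backwards.

    Every stage finishes all M forward passes before its first backward
    pass, so it holds activations for all M micro-batches at the peak.
    """
    return [num_microbatches] * num_stages


def peak_in_flight_alternating(num_stages: int, num_microbatches: int) -> List[int]:
    """Assumed 1F1B-style schedule.

    Stage s runs (P - s) forward passes before its first backward pass,
    then alternates, so it never holds more than min(M, P - s)
    micro-batches' worth of activations.
    """
    return [min(num_microbatches, num_stages - stage) for stage in range(num_stages)]


P, M = 4, 16
print("grouped:    ", peak_in_flight_grouped(P, M))
print("alternating:", peak_in_flight_alternating(P, M))
```

Under these assumptions the grouped schedule's activation memory grows with the number of micro-batches, while the alternating schedule's is capped by the pipeline depth.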

Hybrid Parallelism: The Real-World Standard

No one uses pipeline parallelism alone. Real training jobs combine it with other methods.

- Data parallelism splits the batch across multiple pipeline groups. So if you have 8 GPUs arranged in a 4-stage pipeline, you might run two copies of that pipeline in parallel. That’s 2-way data parallelism times 4-way pipeline parallelism, or 8 GPUs total.

- Tensor parallelism splits individual layers within each pipeline stage. Megatron-Turing NLG (530B parameters), from NVIDIA and Microsoft, used 8-way tensor parallelism within each node and 35-way pipeline parallelism across nodes, so each model replica spanned 280 GPUs, with data parallelism on top to scale out further.

This hybrid approach lets you scale to thousands of GPUs. You’re not just splitting the model; you’re splitting it in multiple dimensions at once. The result? Training models that would have been out of reach just five years ago.

Memory Savings and Scaling Efficiency
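The GPU counts in the hybrid layouts described earlier are just products of the parallelism degrees, and each GPU can be given a coordinate along every dimension. The sketch below shows one way to do that mapping. The ordering convention (tensor index varies fastest, then pipeline, then data) is an assumption chosen for illustration, not the layout of any particular framework, and `world_size`, `rank_to_coord`, and `Coord` are made-up names.

```python
from typing import NamedTuple


class Coord(NamedTuple):
    data: int      # which model replica this GPU belongs to
    pipeline: int  # which pipeline stage it runs
    tensor: int    # which shard of that stage's layers it holds


def world_size(dp: int, pp: int, tp: int) -> int:
    """Total GPUs needed for dp replicas of a pp-stage, tp-way sharded model."""
    return dp * pp * tp


def rank_to_coord(rank: int, dp: int, pp: int, tp: int) -> Coord:
    """Map a flat GPU rank to (data, pipeline, tensor) coordinates.

    Assumed convention: tensor index varies fastest, then pipeline, then data.
    """
    if not 0 <= rank < world_size(dp, pp, tp):
        raise ValueError("rank out of range for this layout")
    return Coord(
        data=rank // (tp * pp),
        pipeline=(rank // tp) % pp,
        tensor=rank % tp,
    )


# The 8-GPU example from the text: 2 pipeline copies of 4 stages each.
dp, pp, tp = 2, 4, 1
print("GPUs needed:", world_size(dp, pp, tp))
for rank in range(world_size(dp, pp, tp)):
    print(rank, rank_to_coord(rank, dp, pp, tp))
```

Keeping the tensor index fastest-varying tends to place the GPUs that talk most often next to each other in rank order, which is the usual motivation for this kind of convention.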

Pipeline parallelism cuts per-GPU memory roughly linearly. If your model needs 320GB and you split it evenly across 8 GPUs, each GPU only needs about 40GB (ignoring activations and communication buffers). That’s the math that makes training possible.

But scaling efficiency, the measure of how much faster you get as you add more GPUs, isn’t perfect. Data parallelism can hit 95% efficiency. Pipeline parallelism? Around 75-85% for models over 100B parameters, according to NVIDIA’s Megatron-LM benchmarks. Why the drop? Communication, plus whatever pipeline bubble is left after micro-batching. Even though pipeline parallelism sends data only between adjacent stages, the volume adds up. And if one stage is slower, say because it has a heavy attention layer, it becomes a bottleneck: every other stage ends up waiting on it. That’s why load balancing matters more than most people realize.
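To make the load-balancing point concrete, here is a minimal sketch that groups consecutive layers into stages so that the slowest stage is as fast as possible. It assumes you already have a per-layer cost estimate (for example, measured milliseconds per micro-batch); the cost numbers below are invented, and `balance_stages` is a hypothetical helper, not how any particular framework assigns layers.

```python
from itertools import accumulate
from typing import List, Sequence


def balance_stages(layer_costs: Sequence[float], num_stages: int) -> List[List[float]]:
    """Split layers into contiguous stages, minimizing the slowest stage's cost.

    Dynamic programming: best[k][i] is the smallest achievable bottleneck
    when the first i layers are split into k non-empty stages.
    """
    n = len(layer_costs)
    if not 1 <= num_stages <= n:
        raise ValueError("need between 1 and len(layer_costs) stages")
    prefix = [0.0] + list(accumulate(layer_costs))
    inf = float("inf")
    best = [[inf] * (n + 1) for _ in range(num_stages + 1)]
    cut = [[0] * (n + 1) for _ in range(num_stages + 1)]
    best[0][0] = 0.0
    for k in range(1, num_stages + 1):
        for i in range(k, n + 1):
            for j in range(k - 1, i):  # the last stage covers layers j..i-1
                bottleneck = max(best[k - 1][j], prefix[i] - prefix[j])
                if bottleneck < best[k][i]:
                    best[k][i] = bottleneck
                    cut[k][i] = j
    # Walk the recorded cut points backward to recover the stages.
    stages: List[List[float]] = []
    i = n
    for k in range(num_stages, 0, -1):
        j = cut[k][i]
        stages.append(list(layer_costs[j:i]))
        i = j
    stages.reverse()
    return stages


# Invented costs: the first layer is 4x heavier than most of the others.
costs = [4, 1, 1, 1, 1, 1, 1, 2]
even_split = [costs[i:i + 2] for i in range(0, len(costs), 2)]
balanced = balance_stages(costs, num_stages=4)

print("even split bottleneck:", max(sum(stage) for stage in even_split))
print("balanced bottleneck:  ", max(sum(stage) for stage in balanced))
print("balanced stages:      ", balanced)
```

Because every stage must wait for the slowest one, lowering the bottleneck cost is what raises throughput, even if it leaves the stages with different layer counts.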

Real-World Challenges

Pipeline parallelism isn’t magic. It’s messy.

- Load imbalance: One layer takes 2x longer than others? That stage becomes a bottleneck. Engineers spend weeks re-grouping layers to even out the load.

- Activation checkpointing: Storing all intermediate activations eats memory. To save space, systems recompute them during the backward pass. But recomputation slows training down. It’s a trade-off between memory and speed.

- Debugging: A bug in a 128-stage pipeline? Good luck tracing it. One Meta AI engineer said debugging pipeline code takes 3x longer than data parallel code.

- Training instability: Some teams report higher loss spikes and slower convergence with pipeline parallelism. Hyperparameters that work in data parallelism don’t always transfer.

A 2023 survey of 127 AI engineers found 68% struggled with activation recomputation, and 42% saw increased training instability. It’s not for beginners.

What’s New in 2025?

The field is moving fast. In 2023, NVIDIA introduced dynamic pipeline reconfiguration in Megatron-Core. You can now pause training, change the number of pipeline stages, and resume from a checkpoint without restarting from scratch. That’s huge for long-running jobs. ColossalAI’s v0.3.8 introduced zero-bubble pipeline parallelism, overlapping communication with computation so much that idle time drops to near zero. Microsoft’s 2023 NeurIPS paper showed 95% scaling efficiency across 1,024 GPUs using asynchronous updates.

And the trend is clear: hybrid parallelism is now the norm. 85% of training jobs over 50B parameters combine pipeline, data, and tensor parallelism. Even cloud platforms like AWS SageMaker and Google Vertex AI now offer one-click pipeline parallelism.

Who Needs It?

If you’re training a model under 10 billion parameters, you probably don’t need pipeline parallelism. Data parallelism on 4-8 GPUs is enough. But once you cross 10B, things change. According to MLPerf’s Q4 2023 survey:

- 18% of teams training models under 10B use pipeline parallelism.

- 92% of teams training models over 50B use it.

Final Thoughts

Pipeline parallelism is the quiet engine behind today’s biggest AI models. It doesn’t get the headlines that transformers or RLHF do. But without it, ChatGPT, Claude, and Gemini wouldn’t exist. It’s the reason we can train models with hundreds of billions of parameters on existing hardware. The trade-offs are real: complexity, debugging nightmares, tuning headaches. But the alternative, giving up on larger, smarter models, isn’t acceptable. As model sizes keep exploding and GPU memory lags behind, pipeline parallelism isn’t just useful. It’s essential.

For engineers, the path is clear: learn distributed systems, understand PyTorch’s distributed backend, and master micro-batching. The future of AI isn’t just about better algorithms. It’s about better ways to train them.

Amanda Harkins

January 5, 2026 AT 00:20

It’s wild how we’ve normalized training models that require more memory than most laptops have total storage. We’re basically building digital skyscrapers on sand.

And yet, nobody talks about the energy cost. It’s not just hardware: it’s electricity, cooling, carbon. We’re optimizing for speed, not survival.

Jeanie Watson

January 5, 2026 AT 06:31

meh. i just want the chatbot to work. who cares how it’s built.

Tom Mikota

January 6, 2026 AT 09:30

Okay, so let me get this straight: you’re telling me we spent $100M to train a model that needs 3,072 GPUs… and the real breakthrough was… splitting it into smaller chunks? And calling it ‘pipeline parallelism’ like it’s a new kind of yoga?

Also, ‘micro-batching’? That’s just… batching, but smaller. You didn’t invent math, you just renamed it with a startup buzzword.

Mark Tipton

January 8, 2026 AT 02:36

Let’s be honest: this entire system is a house of cards built on speculative hardware investments and corporate vanity. The 85% scaling efficiency? That’s a fairy tale. Real-world bottlenecks are far worse than papers admit.

And let’s not forget: every single ‘hybrid parallelism’ system is secretly just a workaround for the fact that we’ve hit the end of Moore’s Law. We’re not advancing AI. We’re just shuffling data between GPUs like a magician distracting you with his left hand.

Meanwhile, the real innovation (efficient inference, quantization, distilled models) is ignored because it doesn’t require a $50M cluster. The industry is addicted to scale, not intelligence.

And yes, I’ve seen the internal Slack threads. The debugging nightmares? They’re worse than you think. One engineer at a major lab told me they once spent three weeks tracking a bug that turned out to be a single misplaced comma in a CUDA kernel.

And don’t even get me started on how training instability is swept under the rug because ‘it converges eventually.’ Eventually? We’re talking weeks of wall-clock time. That’s not engineering. That’s alchemy with a budget.

Adithya M

January 9, 2026 AT 14:45

Actually, this is the only way forward. In India, we don’t have access to 1000 GPUs, but we do have smart engineers who can optimize pipelines. Micro-batching isn’t magic. It’s necessity.

And yes, debugging is hell. But if you’re serious about scaling, you learn to love the pain. I’ve written my own pipeline scheduler in PyTorch. It’s ugly, but it works. You want to train big models? Stop whining. Start coding.

Jessica McGirt

January 10, 2026 AT 12:34

I appreciate how clearly you explained the trade-offs between grouped and interleaved scheduling. Most posts gloss over this, but the memory-throughput balance is critical.

Also, the point about load imbalance being underestimated? Spot on. I’ve seen teams waste months because they assumed layers were evenly distributed. Turns out, layer 37 was a monster attention block. No one noticed until training slowed to a crawl.

And activation checkpointing: it’s not just a memory hack. It’s a philosophical choice: do we value speed or sustainability? I’d argue we’re choosing speed because it’s easier to measure.

Jamie Roman

January 12, 2026 AT 05:47

Man, I read this whole thing and I’m just sitting here thinking about how much I don’t know. I’ve used transformers in my projects, but I’ve never even touched distributed training. The idea of splitting a model across GPUs like an assembly line… it’s so elegant, but also terrifying.

I can’t even imagine trying to debug a 128-stage pipeline. One tiny error and the whole thing collapses. And the fact that you need to recompute activations just to save memory? That’s like burning fuel to save gas.

But honestly? I’m glad someone’s doing this. Because if we’re going to build models that understand context, emotion, nuance, we’re gonna need way more than just bigger datasets. We need smarter ways to train them. Even if it’s messy.

Also, micro-batching is genius. I feel like I should’ve thought of that. But then again, I’m just a guy who trains 1B models on a single RTX 4090. I’m lucky if my GPU doesn’t melt.

Salomi Cummingham

January 12, 2026 AT 14:49

Oh my god, I just cried a little reading this. Not because it’s sad, but because it’s beautiful. This is engineering poetry.

Think about it: we took something that seemed impossible, a model too big to fit, and turned it into a dance. Each GPU, a dancer. Each micro-batch, a step. The bubbles? The pauses between beats. And then, with micro-batching, suddenly the rhythm flows. It’s not just efficient. It’s artistic.

And yes, it’s messy. Debugging is like untangling a thousand threads in the dark. But look what we’ve built: models that write poems, diagnose diseases, simulate galaxies.

None of it would exist without this quiet, ugly, brilliant system. Pipeline parallelism isn’t just a technique. It’s the unsung hero of the AI revolution.

So thank you. For writing this. For doing this. For not giving up.

Even when the GPUs are hot. Even when the gradients vanish. Even when your boss says ‘just make it faster.’

You kept going. And that? That’s what matters.