When AI tools rewrite your code, they don’t just change how it works; they can break how it stays secure. You might think that if the app still runs, it’s fine. But that’s where the danger hides. In 2024, companies using AI to refactor code saw security regression testing become the difference between a quiet deployment and a $500,000 breach. This isn’t theory. It’s happening right now in production systems across finance, healthcare, and e-commerce.

Why AI Refactoring Breaks Security Without You Knowing

AI tools like GitHub Copilot and Amazon CodeWhisperer don’t understand security policies. They optimize for speed, readability, or style. That means they’ll happily remove an input validation check because it looks "redundant," or replace a hardcoded secret with a dynamic lookup without checking whether the new method is properly secured. These aren’t bugs. They’re silent security regressions.

Take authentication flows. A 2024 Snyk study found that 28% of AI-refactored code introduced improper access control. The app still logs users in. The UI looks right. But now a regular user can reach admin endpoints because the AI removed a single line of role-checking code. Traditional regression tests won’t catch it: they check whether the login button works, not whether the system enforces permissions.
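A security regression test for that role-check scenario has to assert the denial, not just the happy path. The sketch below is a minimal, framework-free illustration; `User`, `authorize`, `handle_request`, and the `ADMIN_ENDPOINTS` table are hypothetical stand-ins for your own auth layer, and in a real project the same assertions would run through your web framework's test client.

```python
from dataclasses import dataclass

# Hypothetical policy table: endpoints that require the "admin" role.
ADMIN_ENDPOINTS = {"/admin/users", "/admin/billing"}


@dataclass(frozen=True)
class User:
    name: str
    role: str  # e.g. "admin" or "user"


class Forbidden(Exception):
    """Raised when a caller lacks the role an endpoint requires."""


def authorize(user: User, endpoint: str) -> None:
    # This role check is the kind of "redundant-looking" code a refactor might drop.
    if endpoint in ADMIN_ENDPOINTS and user.role != "admin":
        raise Forbidden(f"{user.name} ({user.role}) may not access {endpoint}")


def handle_request(user: User, endpoint: str) -> str:
    authorize(user, endpoint)
    return f"200 OK {endpoint}"


def test_regular_user_is_denied_admin_endpoints() -> None:
    regular = User("alice", "user")
    for endpoint in sorted(ADMIN_ENDPOINTS):
        try:
            handle_request(regular, endpoint)
        except Forbidden:
            continue  # expected: access is still denied after the refactor
        raise AssertionError(f"regression: {endpoint} reachable without admin role")


def test_admin_still_allowed() -> None:
    assert handle_request(User("root", "admin"), "/admin/users") == "200 OK /admin/users"


if __name__ == "__main__":
    test_regular_user_is_denied_admin_endpoints()
    test_admin_still_allowed()
    print("access-control regression tests passed")
```

If the `if` inside `authorize` were deleted, the denial test would fail loudly while a login-only test would keep passing, which is exactly the gap described above.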

Another common issue: cryptographic changes. AI often replaces deprecated algorithms like MD5 or SHA-1 with newer ones, but it doesn’t always handle key derivation, salt generation, or IV management correctly. One team at a major bank found that their AI-generated code had switched from PBKDF2 to Argon2 but never adjusted the iteration count. The result? Password hashes were now crackable in under 48 hours.
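One way to catch that kind of silent weakening is to pin the hashing parameters in a test, so a refactor that lowers the work factor or stops generating fresh salts fails CI. The sketch below uses PBKDF2-HMAC-SHA256 from Python's standard library because Argon2 needs a third-party package. The `hash_password` and `verify_password` helpers and their record format are hypothetical, and the 600,000-iteration floor is an assumed policy value based on OWASP's Password Storage Cheat Sheet guidance for PBKDF2-HMAC-SHA256. For Argon2 you would pin its time cost, memory cost, and parallelism instead.

```python
import hashlib
import hmac
import os

# Assumed policy floor (OWASP guidance for PBKDF2-HMAC-SHA256); set per your policy.
MIN_ITERATIONS = 600_000
MIN_SALT_BYTES = 16

# Hypothetical production setting that a refactor might silently change.
ITERATIONS = 600_000


def hash_password(password: str, iterations: int = ITERATIONS) -> dict:
    """Return a storable record: salt, work factor, and derived key."""
    salt = os.urandom(MIN_SALT_BYTES)  # fresh random salt for every hash
    key = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, iterations)
    return {"salt": salt, "iterations": iterations, "key": key}


def verify_password(password: str, record: dict) -> bool:
    key = hashlib.pbkdf2_hmac(
        "sha256", password.encode(), record["salt"], record["iterations"]
    )
    return hmac.compare_digest(key, record["key"])  # constant-time comparison


def test_hashing_parameters_not_weakened() -> None:
    first = hash_password("correct horse battery staple")
    second = hash_password("correct horse battery staple")
    assert first["iterations"] >= MIN_ITERATIONS, "work factor was lowered"
    assert len(first["salt"]) >= MIN_SALT_BYTES, "salt was shortened"
    assert first["salt"] != second["salt"], "salt is no longer random per hash"
    assert first["key"] != second["key"], "same password produced the same hash"
    assert verify_password("correct horse battery staple", first)
    assert not verify_password("wrong password", first)
```

The test asserts properties of the stored record rather than a specific algorithm, so swapping the hash function during a refactor forces someone to revisit the policy constants on purpose instead of by accident.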

How Security Regression Testing Is Different

Standard regression testing asks: "Does it still do what it used to?" Security regression testing asks: "Does it still keep people out the way it used to?"

That means adding test cases that focus on:

- Access control: Can a user with role A access resource B after the refactor?

- Data validation: Are inputs still sanitized in all entry points?

- Session management: Are tokens invalidated properly? Are cookies marked HttpOnly?

- Configuration drift: Did the AI change environment variables, headers, or middleware order?

- API security: Are rate limits, authentication headers, and input schemas still enforced?
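Several of these checks, such as cookie flags and security headers, can be written as a comparison between a response captured after the refactor and a baseline the team agreed on beforehand. The sketch below parses a `Set-Cookie` value with Python's standard `http.cookies` module and compares response headers against a hypothetical `REQUIRED_HEADERS` baseline; the header values shown are common hardening choices, not requirements from this article, and in practice the headers would come from a request made by your test client.

```python
from http.cookies import SimpleCookie

# Hypothetical baseline recorded before the AI refactor.
REQUIRED_HEADERS = {
    "strict-transport-security": "max-age=31536000; includeSubDomains",
    "x-content-type-options": "nosniff",
    "x-frame-options": "DENY",
}


def cookie_flag_problems(set_cookie: str) -> list[str]:
    """Report cookies that are missing HttpOnly, Secure, or SameSite."""
    problems = []
    cookie = SimpleCookie()
    cookie.load(set_cookie)
    for name, morsel in cookie.items():
        # Unset attributes are empty strings; parsed flags are True.
        if not morsel["httponly"]:
            problems.append(f"cookie {name!r} is missing HttpOnly")
        if not morsel["secure"]:
            problems.append(f"cookie {name!r} is missing Secure")
        if not morsel["samesite"]:
            problems.append(f"cookie {name!r} is missing SameSite")
    return problems


def header_drift(headers: dict[str, str]) -> list[str]:
    """Report baseline security headers that were removed or changed."""
    normalized = {k.lower(): v for k, v in headers.items()}
    drift = []
    for name, expected in REQUIRED_HEADERS.items():
        actual = normalized.get(name)
        if actual is None:
            drift.append(f"header {name!r} was removed")
        elif actual != expected:
            drift.append(f"header {name!r} changed: {actual!r} != {expected!r}")
    return drift
```

Both functions return a list of readable problems instead of raising, so one CI run reports every drift at once and the test itself only needs `assert not problems`.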

According to DX’s 2024 study, teams that added just 15% more security-focused test cases cut post-deployment security issues by 70%. That’s not a small win. That’s the difference between passing an audit and getting fined under GDPR or PCI-DSS.

Tools That Actually Work for AI-Generated Code

Not all SAST or DAST tools handle AI refactoring well. Most were built to scan human-written code, and AI-generated code has different patterns: sometimes cleaner, sometimes weirder.

Here’s what’s working in 2026:

- SonarQube 9.9+: Detects AI-specific security patterns like missing context-aware authorization checks. Its 2024 update improved detection of AI-introduced vulnerabilities by 35%.

- Semgrep: Lets you write custom rules for AI-generated code smells. For example: "Flag any jwt.verify() call that doesn’t check the audience claim."

- OWASP ZAP with AI plugins: Now includes behavioral analysis to spot changes in session handling or CSRF token validation.

- Checkmarx and Synopsys Seeker: Both now have AI-refactoring impact analysis. They map which security-critical paths were touched and auto-generate test scenarios.
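The Semgrep bullet above describes a rule that flags token verification without an audience check. A real Semgrep rule is written in YAML; as a rough, dependency-free illustration of the same idea, the sketch below walks Python source with the standard `ast` module and flags PyJWT-style `jwt.decode(...)` calls that pass no `audience=` keyword. It is a simplified stand-in, not Semgrep: it only matches calls spelled literally as `jwt.decode`, and it conservatively flags calls that pass options through `**kwargs`.

```python
import ast


def find_unscoped_jwt_decodes(source: str) -> list[int]:
    """Return line numbers of jwt.decode(...) calls with no audience= keyword."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        is_jwt_decode = (
            isinstance(func, ast.Attribute)
            and func.attr == "decode"
            and isinstance(func.value, ast.Name)
            and func.value.id == "jwt"
        )
        # kw.arg is None for **kwargs, so such calls are flagged conservatively.
        if is_jwt_decode and not any(kw.arg == "audience" for kw in node.keywords):
            findings.append(node.lineno)
    return sorted(findings)


SAMPLE = '''
import jwt

def before_refactor(token, key):
    return jwt.decode(token, key, algorithms=["HS256"], audience="billing-api")

def after_refactor(token, key):
    return jwt.decode(token, key, algorithms=["HS256"])
'''

if __name__ == "__main__":
    print(find_unscoped_jwt_decodes(SAMPLE))  # → [8]
```

Run over the files a PR touches, a non-empty result is a prompt to check whether the refactor dropped an audience check; deciding whether it is a true regression still takes a human reviewer.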

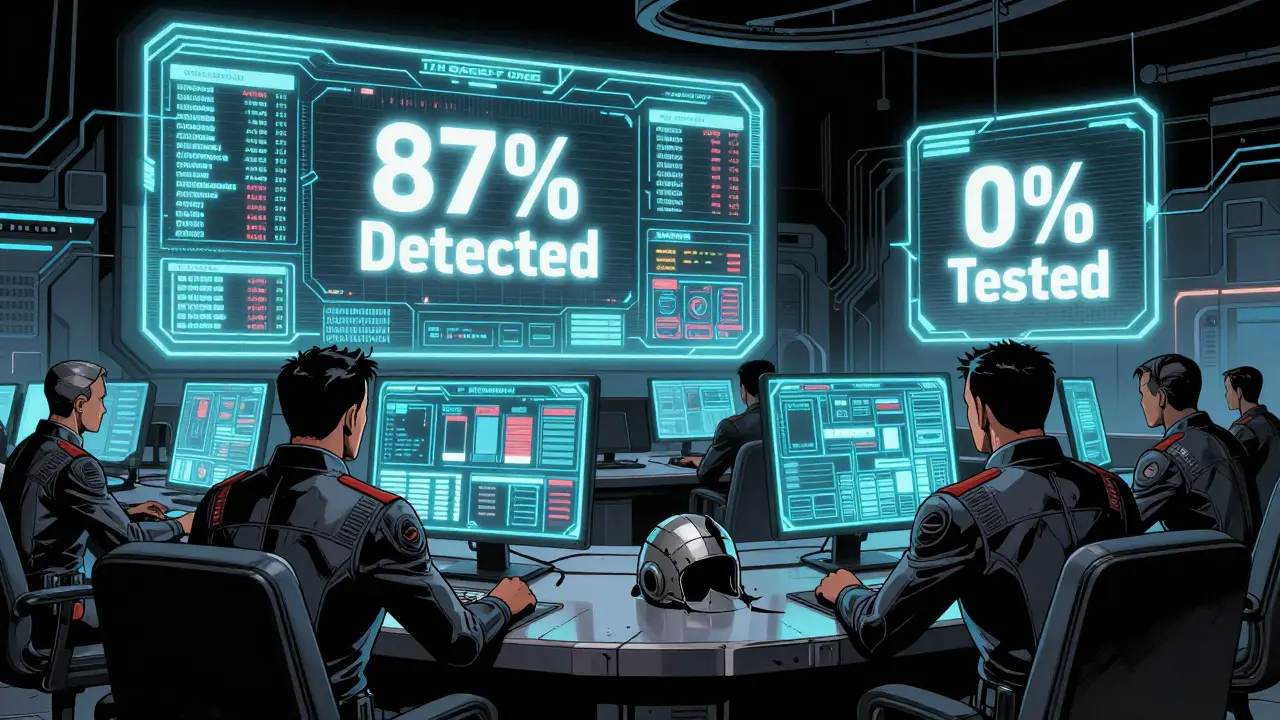

Veracode’s Q2 2024 report showed these tools catch 87% of AI-induced security flaws, compared to just 42% for generic scanners. The gap isn’t small. It’s life-or-death for regulated industries.

The Cost of Not Doing It

Some teams still think: "We’ll just run penetration tests after deployment." That’s like checking your locks after someone broke in.

Synopsys analyzed 1,200 breaches tied to AI refactoring in 2023. The average cost? $147,000 per incident. That includes downtime, legal fees, customer notifications, and reputational damage. Capital One reduced PCI-DSS violations by 92% after implementing security regression gates. That’s not luck. That’s process.

And the costs don’t stop at the breach itself. In healthcare, a single unpatched vulnerability in AI-refactored code can trigger HIPAA fines of up to $1.5 million per year for violations of the same provision (a cap since adjusted for inflation). In finance, regulators now require proof of security regression testing before approving AI-driven code changes.

How to Start Without Overwhelming Your Team

You don’t need to rewrite your entire test suite. Start small. Here’s a realistic 3-phase plan:

- Catalog security-critical code paths (2-3 weeks): Find the 10-20% of your codebase that handles auth, payments, PII, or encryption. Use code ownership tools or dependency maps. Don’t guess; know exactly what’s at risk.

- Add 15-20% more security test cases: Focus on OWASP Top 10 items most affected by AI refactoring: broken access control, security misconfigurations, and insecure design. Use existing test templates from OWASP’s AI Security Testing Guide v1.2 (released Oct 2024).

- Build a CI/CD gate (1-2 weeks): Block merges if security regression tests fail. Use GitHub Actions or GitLab CI to auto-run Semgrep and SonarQube on any PR tagged as "AI-refactor."
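Phases 1 and 3 can meet in a small gate script: the catalog from phase 1 becomes a list of path patterns, and the CI job fails when a PR labeled "AI-refactor" has blocking scanner findings. The sketch below assumes the `results`, `path`, `start.line`, `check_id`, and `extra.severity` fields that `semgrep --json` writes; check them against the Semgrep version you run. The path patterns, the blocking severity set, and the convention of passing PR labels as a comma-separated argument are all hypothetical.

```python
import fnmatch
import json
import sys

# Hypothetical phase-1 catalog: glob patterns for security-critical code.
SECURITY_CRITICAL_PATHS = ["src/auth/*", "src/payments/*", "src/crypto/*"]
BLOCKING_SEVERITIES = {"ERROR"}  # assumed severity name; verify for your version
AI_REFACTOR_LABEL = "AI-refactor"


def is_security_critical(path: str) -> bool:
    # fnmatch's "*" also matches "/", so nested files are covered too.
    return any(fnmatch.fnmatch(path, pattern) for pattern in SECURITY_CRITICAL_PATHS)


def blocking_findings(report: dict) -> list[str]:
    """Collect findings that are high severity or land in catalogued paths."""
    blocked = []
    for result in report.get("results", []):
        severity = result.get("extra", {}).get("severity", "")
        path = result.get("path", "")
        if severity in BLOCKING_SEVERITIES or is_security_critical(path):
            line = result.get("start", {}).get("line", "?")
            blocked.append(f"{path}:{line} {result.get('check_id', 'unknown-rule')}")
    return blocked


def gate(report: dict, pr_labels: list[str]) -> int:
    """Return a process exit code: 0 lets the merge proceed, 1 blocks it."""
    if AI_REFACTOR_LABEL not in pr_labels:
        return 0  # this gate only targets PRs tagged as AI refactors
    findings = blocking_findings(report)
    for finding in findings:
        print(f"BLOCKED: {finding}")
    return 1 if findings else 0


if __name__ == "__main__":
    # Hypothetical usage: python gate.py semgrep.json "AI-refactor,backend"
    with open(sys.argv[1]) as fh:
        semgrep_report = json.load(fh)
    labels = sys.argv[2].split(",") if len(sys.argv) > 2 else []
    sys.exit(gate(semgrep_report, labels))
```

Findings inside catalogued paths block regardless of severity, on the reasoning that even a low-severity result in the auth module deserves a human look before merge; a team could reasonably pick a stricter or looser policy.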

Teams that followed this approach saw test maintenance drop by 55% when using tools like Testim.io’s Smart Maintenance. It learns which tests break when AI changes code and auto-updates them.

What’s Coming Next

This field is evolving fast. Projections put the share of enterprise security budgets with dedicated funding for AI refactoring testing at 85% by 2026, up from 29% in 2023.

Here’s what’s on the horizon:

- GitHub Project Shield (private beta): Real-time security equivalence checks during AI code generation. If the AI suggests a change that breaks a security rule, it warns you before you accept it.

- Google’s SECTR (Q2 2025): AI that generates its own security test cases based on code changes. No manual writing needed.

- OpenSSF Working Group: Google, Microsoft, and IBM are building open standards for AI security regression testing. Expect unified frameworks by mid-2025.

Even Anthropic’s research shows that feeding security regression test results back into AI training reduces code vulnerabilities by 63%. That’s the future: AI that learns from its own mistakes.

Who Needs This Most

If you’re in finance, healthcare, government, or any industry with strict compliance rules, you’re already behind if you’re not doing this. But even startups using AI to scale fast need to pay attention. One fintech startup skipped security regression testing, trusted their AI to "clean up" their auth service, and got breached through a forgotten OAuth scope check. They lost $2.3 million in customer refunds and shut down within 6 months.

Teams with over 1,000 developers are running 3.2x more security regression tests than smaller teams. Why? Because they’ve seen the cost. The rest are just learning the hard way.

Final Reality Check

AI isn’t replacing developers. But developers who use AI without security regression testing are replacing themselves with a breach.

You don’t need to be a security expert to start. You just need to care enough to add one more step: before you merge that AI-generated PR, ask, "What did this change about how we protect data?" Then test it.

The tools are here. The data is clear. The cost of inaction is rising. If your team is using AI to refactor code, you’re already in the game. The question isn’t whether you should do security regression testing. It’s whether you’ll do it before the next incident hits.

Michael Jones

January 15, 2026 AT 01:20

AI doesn't care if your code is secure; it just wants to look clean

That's the problem with outsourcing thinking to machines

You get faster code but slower awareness

Security isn't a feature it's a habit

And habits need discipline not automation

We're trading control for convenience and pretending it's progress

It's not

It's just a slower kind of suicide

And the worst part? We know it

allison berroteran

January 16, 2026 AT 23:20

I've seen this happen in three different teams now and it's always the same story

Someone says 'the AI cleaned it up' and everyone nods because the tests pass

But no one checks the permission chains or the token expiration logic or the way the new hash function handles salts

It's not that people are lazy; it's that they're overwhelmed

We're being asked to trust tools we don't understand to fix things we barely remember how to secure

And when it breaks we act shocked like it was a surprise

It wasn't

The warning signs were all there in the code reviews we skipped

We just didn't want to admit we needed to learn a new kind of vigilance

Maybe the real solution isn't better tools but better humility

Gabby Love

January 17, 2026 AT 00:15

Just a quick note: SonarQube 9.9's AI pattern detection is solid, but it misses edge cases where multiple refactors compound. Always pair it with manual review of auth flows. Also, OWASP's AI guide v1.2 has a template for JWT audience checks that saved my team last quarter.

Jen Kay

January 18, 2026 AT 13:02

It's almost poetic how we've built an entire industry on automating away responsibility

First we automated testing

Then we automated deployment

Now we're automating security

And somehow we're surprised when the house burns down

Let's be real: no AI is going to understand why a role check matters if you don't teach it

And no amount of CI/CD gates will fix a culture that treats security as a checkbox

Maybe instead of more tools we need to stop pretending that efficiency and safety can be separated

It's not a technical problem

It's a moral one

Michael Thomas

January 18, 2026 AT 20:20

USA leads in AI security because we don't coddle developers. Fix your code or get replaced. Simple.