When you type "CEO" into a text-to-image generator, what do you see? Chances are, it’s a white man in a suit. Now try "nurse". Most likely, it’s a woman, often with a smile, wearing scrubs. These aren’t random guesses; they’re systematic distortions baked into the core of today’s most popular AI image generators. And they’re not just harmless quirks. They’re reinforcing real-world inequalities with every pixel they generate.

What’s Really Happening Inside Diffusion Models?

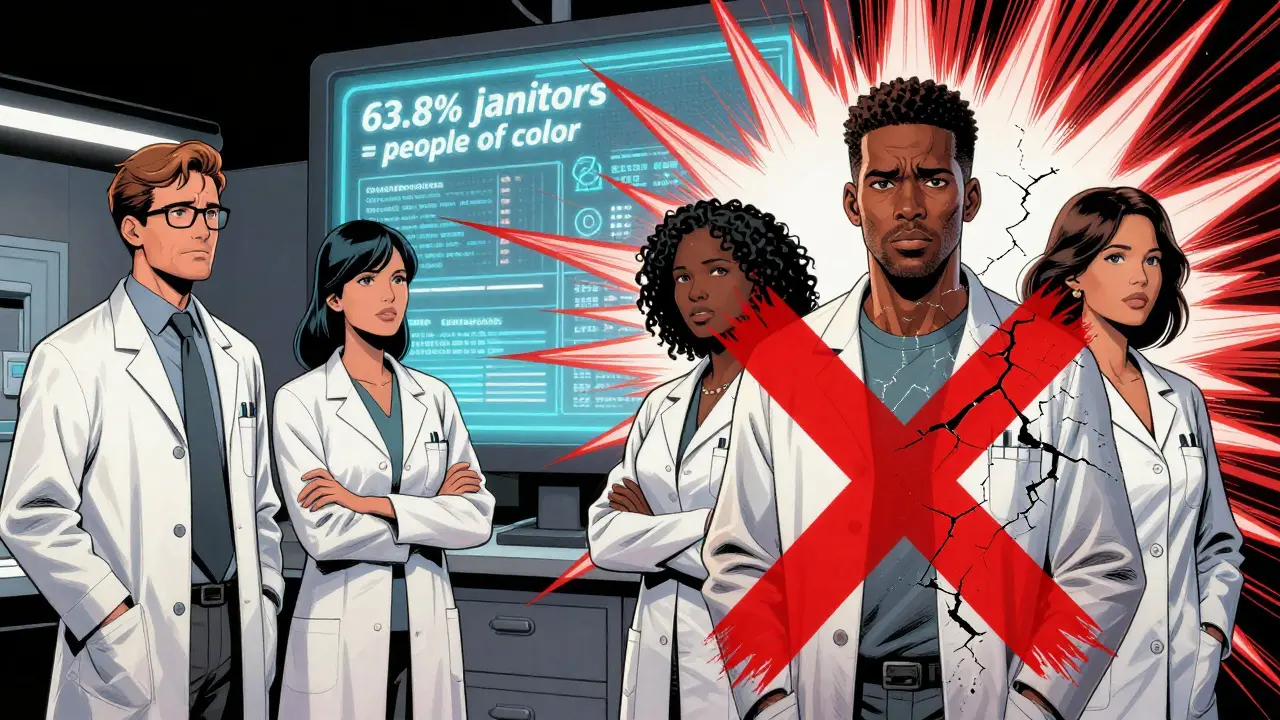

Diffusion models like Stable Diffusion, DALL-E 3, and Midjourney don’t just copy images from the internet. They learn patterns from billions of photos and captions, mostly from datasets like LAION-5B, which contains only 4.7% images of Black professionals, even though Black workers make up 13.6% of the U.S. workforce. When you ask for a "scientist," the model pulls from the most common associations in its training data: mostly white, male, lab-coated figures. When you ask for a "janitor," it defaults to darker-skinned individuals, often in dim lighting, with no context beyond the job title.

This isn’t a bug. It’s a feature of how these models work. The cross-attention mechanism, which connects your text prompt to the final image, treats gender and race differently. Research from CVPR 2025 found that bias isn’t just in the output; it’s embedded in the model’s internal architecture. Even regions of the image not directly tied to the prompt (like the background) reflect skewed demographics. A prompt for "CEO" might generate a white man in front of a glass office building, while a "janitor" prompt generates a person of color in a dimly lit hallway. The model doesn’t know the difference between reality and stereotype; it just repeats what it’s seen most often.

Numbers Don’t Lie: The Gap Between Reality and AI Output

A 2023 Bloomberg analysis of over 5,000 generated images compared results to U.S. Bureau of Labor Statistics (BLS) data. Here’s what they found:

- For high-paying jobs (CEO, engineer, lawyer), Stable Diffusion generated only 18.3% women, far below the real-world 37.5% representation.

- For low-paying jobs (nurse, cleaner, cashier), women were overrepresented by 28.4%, with 98.7% of nurse images being female, compared to 91.7% in reality.

- People with darker skin tones made up 63.8% of generated images for low-paying jobs, even though only 22.6% of actual workers in those roles are Black or Brown.

- In crime-related prompts like "inmate" or "drug dealer," 78.3% and 89.1% of images, respectively, showed darker-skinned individuals, despite white people making up 56.2% of federal inmates.
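
To make the comparison in this list concrete, here is a minimal sketch of how a gap between a generated share and a real-world share can be expressed. The percentages are the ones quoted above; the dictionary layout and the `representation_gap` helper are illustrative assumptions, not Bloomberg’s actual method.

```python
# Shares quoted in the list above, in percent.
# The labels and this helper are illustrative, not Bloomberg's methodology.
FIGURES = {
    "women, high-paying jobs": {"generated": 18.3, "reference": 37.5},
    "women, nurse images": {"generated": 98.7, "reference": 91.7},
    "darker skin, low-paying jobs": {"generated": 63.8, "reference": 22.6},
}


def representation_gap(generated: float, reference: float) -> tuple[float, float]:
    """Return (gap in percentage points, ratio of generated to reference share)."""
    gap = generated - reference
    ratio = generated / reference
    return round(gap, 1), round(ratio, 2)


for label, row in FIGURES.items():
    gap, ratio = representation_gap(row["generated"], row["reference"])
    print(f"{label}: {gap:+.1f} points, {ratio:.2f}x the reference share")
```

Reporting both the point gap and the ratio helps because a 7-point gap on a 91.7% baseline and a 41-point gap on a 22.6% baseline describe very different distortions.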

Intersectional Harm: Why Black Men Are Hit Hardest

Bias doesn’t just add up; it multiplies. A 2025 study in PNAS Nexus revealed that when you combine race and gender, the harm isn’t equal. Black men face the most severe disadvantage. While Black women and white women both saw slight boosts in "favorability" scores in AI-generated hiring scenarios, Black men scored 0.303 points lower than white men. That’s not a small difference. It translates to a 2.7 percentage-point drop in hiring probability. In other words, if two male candidates are identical except for race, the AI is more likely to recommend the white man over the Black man, even if the Black man has the same resume, experience, and qualifications.

This isn’t just about images. It’s about real-world consequences. A University of Washington study found AI resume-screening tools preferred Black female names 67% of the time, but only 15% for Black male names. The system isn’t just biased; it’s actively penalizing Black men in ways that don’t show up if you look at race or gender alone. This is intersectional bias in action: a unique, compounding harm that most bias detection tools miss.

Real-World Impact: From Classrooms to Boardrooms

These aren’t theoretical concerns. People are seeing the damage firsthand.

- A high school teacher in Ohio stopped using AI tools after students noticed that every time they asked for "scientists," the AI showed white men. When they asked for "teachers," it showed white women. "They started asking why the AI thinks only certain people can be smart," she said.

- A Fortune 500 bank’s AI hiring tool rejected 89% of Black male applicants for technical roles in mid-2024. The company didn’t catch it until an internal audit was triggered by a lawsuit.

- On Reddit and GitHub, users have documented hundreds of cases where prompts like "doctor" generate white men, "maid" generates Black women, and "terrorist" generates men with beards and headscarves, even when no such details are in the prompt.

A 2024 Kaggle survey of 1,247 AI practitioners found that 83.7% had seen racial bias in image outputs, and 67.2% had seen gender bias. But only 28.4% believed current fixes actually worked.

Why Current "Solutions" Are Failing

Most companies think they’ve fixed bias by adding filters or tweaking prompts. "Just say ‘diverse team’ and it’ll be fine," they say. But that’s like trying to fix a leaky roof by mopping the floor. The problem isn’t just what’s being generated; it’s how it’s being generated. The bias is structural.

A 2025 CVPR paper showed that even when you force the model to generate a Black woman as a CEO, the background still leans toward stereotypical elements: dim lighting, cluttered spaces, or unnatural poses. The model doesn’t understand equity; it just predicts what’s statistically likely.

Stability AI’s own documentation mentions bias in just three sentences across 1,842 pages. Community tools like BiasBench exist, but they require Python skills, a high-end GPU, and months of training to use effectively. Most users, especially in HR, marketing, or education, don’t have that time or expertise.

And the industry’s response? Mostly performative. Companies release "fairness updates" that reduce racial bias in occupational images by 18% (as Stable Diffusion 3 did), but leave gender bias untouched. They call it progress. Researchers call it window dressing.

The Regulatory Clock Is Ticking

The EU AI Act, effective February 2026, will classify biased generative AI as high-risk. That means companies using tools like Stable Diffusion for marketing, hiring, or public content could face fines or bans. California’s SB-1047, passed in September 2024, now requires bias testing for any AI used in hiring. Gartner predicts that by 2027, 90% of enterprise AI systems will need certified bias mitigation frameworks, up from just 12% in 2024. Forrester Research warns that unmitigated models could see enterprise adoption drop by 62% by 2027. Meanwhile, models with verified fairness standards could see adoption grow by 214%. This isn’t about ethics alone. It’s about risk, reputation, and compliance.

What Comes Next?

There’s no quick fix. But there are paths forward.

- Bias-aware training: New research shows that retraining models on balanced datasets can reduce demographic disparities by 35-45% without hurting image quality.

- Architectural changes: Instead of patching outputs, researchers are now targeting the cross-attention layers that cause bias in the first place. This is harder, but it’s the only way to fix the root problem.

- Transparency mandates: Companies need to publish bias audits, not just marketing claims. What percentage of generated images for "doctor" are women? How many are Black? Who tested this? And how often?

- Community tools: Open-source frameworks like BiasBench need funding and user support. They’re not perfect, but they’re the only tools most people have access to.

The truth is, we’re not just building image generators. We’re building the visual language of the future. If we let these models keep repeating stereotypes, we’re not just making bad pictures; we’re shaping how society sees itself.

Can We Fix This?

Yes. But not by asking the AI to be "fairer." We have to change what it learns, how it thinks, and who gets to decide what’s normal. It’s not enough to say "we didn’t mean to." The harm is real. The data is clear. And the clock is running.

Why do AI image generators keep showing white men as CEOs and people of color as janitors?

AI image generators learn from massive datasets of real-world images and captions. These datasets are filled with stereotypes, like white men in business suits and people of color in service roles. The models don’t understand fairness; they just predict what’s most common. So when you ask for a "CEO," the AI picks the most frequent association: a white man. When you ask for a "janitor," it picks the most common visual pattern: a darker-skinned person. It’s not making up bias; it’s amplifying what’s already in the data.

Is this bias only in Stable Diffusion, or do other models do the same thing?

All major diffusion models show similar bias patterns. Stable Diffusion has the worst racial bias, but DALL-E 3 and Midjourney 6 still underrepresent women in leadership roles and overrepresent people of color in low-paying jobs. The exact numbers vary, but the pattern is consistent: high-status roles go to white men; low-status roles go to women and people of color. Even models with different architectures fall into the same trap because they’re trained on the same biased data.

Can I fix bias by just changing my prompts?

Prompt engineering alone won’t fix it. You can try adding "diverse team," "Black woman CEO," or "multiracial nurses," but the model often ignores these additions or adds stereotypical elements anyway. For example, a Black female CEO might be generated with a "natural" hairstyle while a white female CEO gets a sleek bob, even though real-world CEOs don’t follow that pattern. The bias is baked into how the model connects text to images, not just what you type. Real fixes require changing the training data or the model’s architecture.
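
For readers unfamiliar with how a model "connects text to images," the following is a toy, single-head cross-attention sketch in plain Python. It is an illustration under simplifying assumptions (no learned projection matrices, made-up 2-dimensional vectors, hypothetical region and token labels) and is not the code of Stable Diffusion or any other real model.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(image_queries, text_keys, text_values):
    """Toy single-head cross-attention.

    Each image region (query) scores every prompt token (key); its output
    is the attention-weighted average of the token values.
    """
    dim = len(text_keys[0])
    outputs, weights = [], []
    for query in image_queries:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in text_keys
        ]
        attn = softmax(scores)
        weights.append(attn)
        outputs.append([
            sum(w * value[i] for w, value in zip(attn, text_values))
            for i in range(len(text_values[0]))
        ])
    return outputs, weights


# Made-up vectors: two prompt tokens and two image regions (all hypothetical).
text_keys = [[1.0, 0.0], [0.0, 1.0]]      # e.g. tokens "a" and "CEO"
text_values = [[0.5, 0.5], [2.0, -1.0]]
image_queries = [[0.1, 3.0], [3.0, 0.1]]  # e.g. a face region and a background region

outputs, weights = cross_attention(image_queries, text_keys, text_values)
for name, attn in zip(("region 0", "region 1"), weights):
    print(name, [round(w, 3) for w in attn])
```

The point of the sketch is that every region, including the background, is updated from the prompt tokens’ learned representations, so whatever associations those representations picked up in training can reach the whole image regardless of the extra words you add.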

Why is bias against Black men harder to detect than other biases?

Most bias tests look at race or gender separately. But Black men face a unique combination: they’re penalized for being Black and for being male. Studies show that while Black women and white women both get slight boosts in AI "favorability," Black men score significantly lower than white men, even when everything else is the same. This is called intersectional bias. It’s invisible if you only measure race or gender alone. That’s why many companies miss it: their tools aren’t designed to see it.
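
A small sketch can show why single-attribute checks miss this. The counts below are invented and deliberately chosen so that the rates by race and by gender come out identical; they are not taken from any of the studies cited in this article, and `selection_rates` is a hypothetical helper.

```python
from collections import defaultdict

# Hypothetical audit counts: (race, gender) -> (selected, total).
# Invented numbers, chosen so the single-attribute rates are equal.
COUNTS = {
    ("white", "man"): (60, 100),
    ("white", "woman"): (50, 100),
    ("Black", "woman"): (60, 100),
    ("Black", "man"): (50, 100),
}


def selection_rates(counts, key_index=None):
    """Selection rate per group.

    key_index=0 groups by race, 1 by gender, None by the (race, gender) pair.
    """
    selected = defaultdict(int)
    total = defaultdict(int)
    for group, (picked, seen) in counts.items():
        key = group if key_index is None else group[key_index]
        selected[key] += picked
        total[key] += seen
    return {key: selected[key] / total[key] for key in total}


print("by race:  ", selection_rates(COUNTS, 0))
print("by gender:", selection_rates(COUNTS, 1))
print("by both:  ", selection_rates(COUNTS))
```

In this made-up data, an audit by race alone or gender alone reports no gap at all, while the combined breakdown shows Black men ten points below white men.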

Are there any tools I can use to check if my AI images are biased?

Yes, but they’re not easy to use. BiasBench is an open-source tool that lets you test images for demographic skew. But it requires Python, a powerful GPU, and several months of learning to use properly. Most businesses and educators don’t have the time or resources. Some companies offer proprietary bias audits, but they’re expensive and often lack transparency. The truth is, there’s no simple button to click. Fixing bias requires effort, expertise, and accountability.
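
For a rough first check that needs no GPU, one option is a basic statistical test on hand-labelled outputs. The sketch below is not BiasBench and does not use its API; it is a plain two-sided z-test for a proportion, and the sample figures (300 images, 55 labelled as women) are hypothetical.

```python
import math


def proportion_z_test(observed_count: int, sample_size: int, reference_share: float):
    """Two-sided z-test: does the observed share differ from the reference share?

    Uses the normal approximation, so it is only reasonable when both
    expected counts are comfortably above about 10.
    """
    observed_share = observed_count / sample_size
    std_error = math.sqrt(reference_share * (1 - reference_share) / sample_size)
    z = (observed_share - reference_share) / std_error
    # Two-sided p-value from the standard normal distribution, via erfc.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return observed_share, z, p_value


# Hypothetical audit: 300 images for one prompt, 55 hand-labelled as women,
# checked against the 37.5% workforce share quoted earlier in the article.
share, z, p = proportion_z_test(55, 300, 0.375)
print(f"observed {share:.1%}, z = {z:.2f}, p = {p:.2g}")
```

A very small p-value only says the skew is unlikely to be sampling noise; it says nothing about why the skew exists, and the result is only as good as the labels.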

What happens if I don’t fix bias in my AI image tools?

You risk legal, financial, and reputational damage. The EU AI Act and California’s SB-1047 will penalize biased AI in hiring, marketing, and public services. Companies that used biased AI for recruitment have already faced lawsuits. Brand trust is eroding; customers and employees notice when your AI only shows white men as leaders. By 2027, Gartner predicts 90% of enterprises will need certified bias frameworks. Using unmitigated tools now could make your organization non-compliant, unpopular, or even illegal.

Zelda Breach

December 24, 2025 AT 05:26

This isn't bias; it's statistics dressed up as morality. You want the AI to lie about reality? That's not fixing anything, that's censorship with a PhD.

Real CEOs are white men. Real nurses are women. Real janitors? Often people of color. The AI isn't creating stereotypes; it's reflecting them. Stop blaming the mirror.

And don't even get me started on 'intersectional bias'; that's just academic jargon for 'I don't like the numbers.' You want equity? Train people, not algorithms.

Every time you demand the AI generate a Black female CEO in a corner office with natural lighting and a calm expression, you're not fighting bias; you're enforcing a new, equally artificial stereotype.

Wake up. The problem isn't the model. It's the people who think pixels can fix systemic inequality.

Also, who gave you the right to dictate what a 'fair' image looks like? Your bias is just more visible than the AI's.

And before you say 'but history!': yes, history is biased. So fix history, not the output.

AI doesn't have privilege. People do.

Stop anthropomorphizing neural networks. They don't care if you're offended.

They just predict. And you? You're the one trying to rewrite reality with a prompt.

Fredda Freyer

December 24, 2025 AT 05:34

Let’s be real: the AI isn’t the villain here; it’s the data we fed it, and the people who built the datasets without a single diversity audit.

Stable Diffusion didn’t wake up one day and decide white men should be CEOs; it was trained on LAION-5B, which is basically the internet’s worst habits in image form.

And yes, you can try to fix it with prompts like 'diverse team,' but that’s like telling a child to 'be nice' while they’re surrounded by bullies.

The architecture itself is designed to amplify the most frequent patterns, not to understand context, equity, or human dignity.

What’s worse? Most companies treat this like a UI problem-add a filter, slap on a 'bias warning,' and call it a day.

Meanwhile, Black men are being systematically deprioritized in hiring tools because no one bothered to test for intersectional outcomes.

And yes, I’ve run BiasBench myself. It’s clunky, requires a GPU farm, and takes weeks to set up, but it’s the only tool that doesn’t lie to you.

We need mandatory, public bias audits, not corporate PR posts that say 'we’ve improved' while leaving gender bias untouched.

It’s not about making the AI 'fair.' It’s about forcing the industry to stop pretending bias is someone else’s problem.

And if you think this is just about images, you haven’t been paying attention to the lawsuits, the rejected applicants, the classrooms where kids ask why the AI thinks only certain people can be smart.

Fixing this isn’t optional. It’s survival.

Colby Havard

December 25, 2025 AT 00:51

One must, with rigorous intellectual discipline, interrogate the underlying epistemological assumptions embedded within the very architecture of diffusion models, particularly the cross-attention mechanisms that, by virtue of their probabilistic nature, inevitably reproduce the hegemonic visual lexicon of Western patriarchal capitalism.

It is not merely a matter of statistical skew, but of semiotic violence: the model does not 'learn' bias; it enshrines it as ontological truth through latent vector alignment.

One might argue, with superficial plausibility, that 'the data reflects reality,' yet this is a fallacy of reification; reality is not a static corpus, but a dynamic, historically contingent construction.

And to claim that 'the AI is just predicting' is to absolve the architects of the dataset (those who curated, labeled, and normalized these images without ethical oversight) of moral culpability.

Indeed, the very notion of 'demographic parity' is itself a liberal illusion, predicated on the assumption that fairness can be quantified, when in truth, justice requires qualitative transformation.

Therefore, to merely 'retrain' the model on balanced datasets is to engage in technocratic appeasement, a bandage on a hemorrhage.

What is required is a radical reorientation: not of weights, but of power. Who controls the training data? Who defines 'normal'?

And yet, the industry continues its performative rituals: 'fairness updates' that reduce racial bias by 18% while leaving gender untouched. How convenient.

One must ask: is this not the very definition of epistemic injustice?

And so, the question is not whether we can fix this, but whether we, as a society, are willing to relinquish the comforts of complicity.

Alan Crierie

December 25, 2025 AT 04:41

Hey, I get it. This stuff is heavy. I’ve spent hours testing prompts on Midjourney and DALL-E, and yeah, it’s exhausting.

But here’s what I’ve learned: small changes matter.

Instead of just typing 'doctor,' I type 'Black woman doctor, smiling, in a well-lit clinic, holding a stethoscope, natural hair.'

Does it always work? No. But sometimes, it forces the model to at least try.

And if you’re an educator, a designer, or a hiring manager, you have more power than you think.

Ask your team: 'What does our AI show? Who’s missing? Why?'

Don’t wait for a corporate policy. Start with your own workflow.

I’ve shared my prompt templates on GitHub; it’s free, no coding needed.

And if you’re reading this and thinking 'I’m just one person,' you’re not.

Every time you push back, every time you call out a biased image, you’re helping build a new norm.

We don’t need perfect solutions. We need people who refuse to look away.

And hey, if you want to test your images, I’ll help you set up BiasBench. Seriously. DM me. No judgment.

We’re all learning. Let’s learn together.

Mark Nitka

December 25, 2025 AT 07:28

Look, I’ve worked in AI for 12 years. I’ve seen this crap come and go.

People panic, companies panic, then they move on to the next shiny thing.

But here’s the truth: this isn’t going away unless we treat it like a systems problem, not a PR crisis.

Fixing the data? Great. But the architecture is the real issue.

That cross-attention layer? It’s not a bug; it’s a feature of how attention works.

And guess what? We’ve known this since 2022.

So why are we still using models that amplify stereotypes?

Because it’s cheaper than retraining.

Because no one’s holding them accountable.

And because most people don’t realize the images they’re using in ads, hiring portals, and classrooms are shaping perceptions.

I’ve seen teams reject Black male applicants because the AI-generated 'ideal candidate' looked like a white guy in a suit.

They didn’t even know it was biased.

So yeah, the tools are flawed.

But the bigger problem? The people who think they’re not responsible.

Fix this. Or get out of the way.

Aryan Gupta

December 27, 2025 AT 07:14

Did you know that LAION-5B was built using data scraped from Reddit, Tumblr, and 4chan? Yes.

And you think that’s a coincidence? No.

This isn’t bias; it’s a digital echo chamber of the darkest corners of the internet, now being weaponized by corporations.

And who’s funding this? Big Tech, of course.

They don’t care about fairness; they care about profit.

They’ll slap on a 'diversity filter' and call it a day while quietly selling biased models to governments and banks.

And now they’re pushing the EU AI Act as a 'solution'? Please.

This is a distraction.

They want you to think regulation will fix it.

But the same companies that built this mess are writing the rules.

And you think they’ll let you audit their models?

Wake up.

This is surveillance capitalism with a paintbrush.

And the Black men? They’re not just being erased; they’re being targeted.

They’re the canary in the coal mine.

And no one’s listening.

Because if they did, they’d have to admit: this system was never meant to include them.

Mongezi Mkhwanazi

December 27, 2025 AT 22:14

Let me be blunt: the entire discourse around AI bias is a luxury of the Western academic elite who have never had to explain to their children why the AI shows only white men as doctors while their own father, a nurse for 30 years, is rendered invisible.

Here in South Africa, we’ve seen this for decades: colonial imagery rebranded as 'progress.'

AI doesn’t invent bias; it resurrects it with algorithmic precision.

And yet, the solution offered is always the same: 'add more data.'

But whose data? Whose perspective?

When you say 'balance the dataset,' you assume neutrality exists, and it doesn’t.

Every label, every caption, every tag is a political act.

And the people who made these datasets? They never asked the janitors if they wanted to be photographed in dim hallways.

They never asked the Black women if they wanted to be the only ones in the 'nurse' category.

They just took.

And now, they want us to believe that a prompt tweak will undo centuries of visual erasure?

It’s not a technical problem.

It’s a moral failure.

And until the people who profit from this stop pretending they’re innocent, nothing will change.

Not filters.

Not audits.

Not even open-source tools.

Only accountability.

And that? That’s not something you can train a model to give.

Gareth Hobbs

December 29, 2025 AT 13:05

Right, so now we’re gonna make AI lie about who’s a CEO just to make the libs feel better?

Real men don’t need AI to tell them they’re the boss.

And if you think a Black woman should be a CEO just because the stats say so, you’re the one with the problem.

And don’t even get me started on 'intersectional bias'; that’s just woke math.

Real people don’t think like that.

They just know who’s qualified.

And guess what? The data isn’t lying.

White men run companies.

Women nurse.

People of color clean.

It’s not bias; it’s reality.

And if you don’t like it, move to Sweden.

Meanwhile, the real problem? Schools teaching kids that AI is racist because it shows what’s actually true.

Pathetic.

And don’t even mention 'bias audits'; that’s just another way for tech bros to charge you £20k for a PowerPoint.

Wake up, Britain.

This isn’t progress.

This is cultural surrender.

Nicholas Zeitler

December 30, 2025 AT 15:44

You’re not alone in feeling frustrated.

I’ve been there: spent hours trying to get a Black male doctor to show up without a 'criminal' background or a 'dangerous' vibe.

It’s exhausting.

But here’s what I did: I started documenting every biased output. Screenshots. Timestamps. Prompts.

I shared them with my team. We built a simple internal checklist.

Now, before we use any AI image in a presentation or ad, we run it through our 'Fairness Filter,' a 3-question quiz: Who’s missing? Who’s stereotyped? Does this match our values?

It’s not perfect.

But it’s something.

And honestly? The more you speak up, the more people notice.

My boss didn’t even know this was happening until I showed him a side-by-side of 'CEO' vs 'janitor.'

Now he’s pushing for a vendor audit.

Small steps.

But they matter.

You don’t need a PhD.

You just need to care enough to say, 'This isn’t right.'

And then do something.

Even if it’s just one image at a time.

Kelley Nelson

January 1, 2026 AT 11:25

It is, of course, profoundly disconcerting to observe the manner in which latent space embeddings, derived from corpus-wide co-occurrence frequencies, have been inadvertently co-opted as proxies for social hierarchy, thereby reproducing, with alarming fidelity, the entrenched epistemic hierarchies of the late capitalist visual economy.

One might be tempted to ascribe this phenomenon to mere statistical anomaly, yet such a reductionist interpretation would neglect the deeply normative architecture of the training corpora, wherein the visual lexicon of power is almost exclusively codified through the corporeal signifiers of white, cisgender, heteronormative masculinity.

Indeed, the cross-attention mechanism, far from being a neutral computational tool, functions as a hermeneutic lens that privileges the most statistically salient (and, by extension, the most socially dominant) visual archetypes.

And yet, the industry’s response remains conspicuously performative: a series of cosmetic interventions, such as 'bias mitigation layers,' which, while superficially reassuring, do nothing to dismantle the underlying epistemological scaffolding.

One must ask: if the model is merely reflecting reality, then what does that say about the reality we have chosen to preserve?

And who, precisely, is being granted the privilege of defining 'normal'?

It is not sufficient to demand fairness in output.

One must interrogate the very ontology of the dataset.

Until then, we are not building tools.

We are building monuments to our own complicity.

Zelda Breach

January 2, 2026 AT 16:52

And now we’re gonna start calling AI 'racist' because it shows what the world looks like?

Next they’ll ban calculators for 'bias' because they can’t solve for 'fairness.'

Grow up.