Imagine you have a brilliant professor who can solve any math problem by walking through every step out loud. Now imagine giving that same professor’s thinking style to a high school student - not by teaching them every rule, but by showing them how the professor thinks. That’s what chain-of-thought distillation does for small language models. It doesn’t make them bigger. It makes them smarter by copying how the big ones reason.

Why Smaller Models Need Reasoning

Large language models like GPT-4 or Claude 3 can solve complex problems because they’ve seen millions of examples and learned how to break things down. But they’re expensive. Running them can cost hundreds of dollars per day in cloud compute, which most companies can’t sustain. Smaller models - like Mistral-7B or TinyLlama - are cheap and fast, but they usually fail at multi-step problems. They guess. They skip steps. They get lucky on easy questions but collapse on hard ones.

Chain-of-thought (CoT) distillation changes that. Instead of training small models to just spit out answers, you train them to mimic the reasoning path. You show them how a big model thinks: step by step, line by line. And surprisingly, it works. A 7-billion-parameter model trained this way can hit 78.3% accuracy on math problems, against 92.1% for the giant teacher model. That’s not perfect, but it’s enough for real-world use.

How It Actually Works

The process isn’t magic. It’s methodical. First, you take a powerful model - say, DeepSeek R1 - and ask it to solve 10,000 math problems, but force it to write out every step. Not just the answer. Not just a hint. Full reasoning: "First, I need to find the total cost. Then, I subtract the discount. Then, I divide by the number of items..."

Then you clean up the data. About 37% of these reasoning chains are messy - full of errors, repetitions, or nonsense. You filter them out using automated checks. What’s left? Clean, structured thought processes.

Now you take a tiny model - say, a 1.1-billion-parameter TinyLlama - and train it to copy those steps. You don’t care if the reasoning is factually perfect. You care if the structure is right. Research shows that even when the steps are wrong, if the pattern matches - "identify the problem → break it into parts → solve each part → combine results" - the model performs better.

This is where most people get it wrong. They think the model needs to learn the right answers. But the real breakthrough? It doesn’t. A 2025 study from Snorkel.ai reported that models trained on flawed reasoning chains still improved dramatically, as long as the structure stayed consistent. The form matters more than the content.

Three Ways to Distill Reasoning
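Before comparing the approaches, it helps to make the cleanup step from the pipeline concrete. The following is a minimal sketch, not the filter used in any particular study: it assumes each teacher output is a dict with hypothetical `steps` and `answer` keys, and the three checks (too few steps, verbatim repetition, missing answer) are illustrative stand-ins for whatever "automated checks" a real pipeline applies.

```python
from collections import Counter

def is_clean_chain(chain, min_steps=2, max_repeat=1):
    """Return True if a reasoning chain passes basic structural checks.

    `chain` is assumed to be a dict with hypothetical keys:
      "steps":  list of reasoning-step strings
      "answer": final answer string
    """
    steps = [s.strip() for s in chain.get("steps", []) if s.strip()]
    if len(steps) < min_steps:
        return False  # too short to count as step-by-step reasoning
    # Reject chains that repeat the same step verbatim.
    counts = Counter(s.lower() for s in steps)
    if max(counts.values()) > max_repeat:
        return False
    # Require a non-empty final answer.
    if not str(chain.get("answer", "")).strip():
        return False
    return True

def filter_chains(chains):
    """Keep only the chains that pass every structural check."""
    return [c for c in chains if is_clean_chain(c)]

# Toy teacher outputs (invented for illustration).
raw = [
    {"steps": ["Find the total cost.", "Subtract the discount.",
               "Divide by the number of items."], "answer": "4.50"},
    {"steps": ["Add the numbers.", "Add the numbers.",
               "Add the numbers."], "answer": "12"},
    {"steps": ["Guess."], "answer": "7"},
    {"steps": ["Find the area.", "Double it."], "answer": ""},
]
clean = filter_chains(raw)
print(len(clean), "of", len(raw), "chains kept")
```

Note that these checks look only at structure, in line with the point that form matters more than content; a stricter pipeline might also compare the final answer against a reference.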

There are three main approaches, and each has trade-offs.

The oldest method is pre-thinking. The small model generates its reasoning first, then gives the answer. Simple. But if the first step is wrong, everything collapses. Error propagation hits 23.7% - meaning nearly one in four answers fail because of a single misstep.

Then came post-thinking. This flips the order. The model gives the answer first, then explains how it got there. This cuts error sensitivity by nearly 20%. Why? Because the answer anchors the reasoning. Even if the explanation is shaky, the answer has already been committed and can’t be derailed by a bad step. It’s like a student who guesses the answer, then makes up a plausible explanation. In practice, it’s more reliable.

The newest and most powerful is adaptive-thinking. Here, the model learns to choose its own style based on the question. Simple math? Use a short, direct path. Complex logic? Switch to detailed, multi-step reasoning. This approach hits 74.8% accuracy across 12 benchmarks - the highest so far. And it’s efficient. It uses less compute because it doesn’t over-explain easy problems.
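To make the difference concrete, here is a minimal sketch of how training targets for each style could be laid out. The `Reasoning:` / `Answer:` template, the function names, and the step-count heuristic in `choose_style` are all assumptions for illustration. In adaptive-thinking as described above, the model learns when to be brief; the heuristic here only shows how a dataset might mix short and long targets.

```python
def format_target(reasoning_steps, answer, style="pre"):
    """Build the text a student model is trained to produce.

    style="pre":    reasoning first, then the answer
    style="post":   answer first, then the reasoning
    style="direct": answer only (short path for easy questions)
    """
    reasoning = " ".join(reasoning_steps)
    if style == "pre":
        return f"Reasoning: {reasoning}\nAnswer: {answer}"
    if style == "post":
        return f"Answer: {answer}\nReasoning: {reasoning}"
    if style == "direct":
        return f"Answer: {answer}"
    raise ValueError(f"unknown style: {style}")

def choose_style(reasoning_steps, direct_max_steps=1):
    """Hypothetical difficulty proxy: very short teacher chains get the
    direct format, longer ones keep the full reasoning."""
    return "direct" if len(reasoning_steps) <= direct_max_steps else "pre"

steps = ["Total cost is 3 * 4 = 12.", "Discount is 2, so 12 - 2 = 10."]
print(format_target(steps, "10", style="pre"))
print(format_target(steps, "10", style="post"))
print(format_target(steps, "10", style=choose_style(steps)))
```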

What You Need to Make It Work

You don’t need a supercomputer. But you do need the right ingredients.

First, data. You need 7,000 to 17,000 high-quality reasoning chains. Generating them takes about 2.3 GPU-hours per 1,000 examples on an A100. At that rate, a full dataset takes roughly 16 to 39 GPU-hours. You can’t just scrape random Q&A. The reasoning has to be structured, step-by-step, and clean.

Second, training method. Full fine-tuning is overkill. You don’t need to update all 7 billion parameters. Use LoRA - Low-Rank Adaptation. It trains a small set of added low-rank weights, about 0.1% of the model’s parameter count, while the original weights stay frozen. This cuts training time from 100+ GPU days to under 5. And performance? Within 97% of full fine-tuning. That’s the secret weapon. It’s why small labs and startups can now compete.

Third, evaluation. Don’t just test on the same problems you trained on. Run the model on new, unseen questions. That’s where the real weakness shows up. Distilled models often memorize patterns, not principles. They’ll ace a problem they’ve seen before, then fail completely on a slightly different version. One developer reported a 34% drop in accuracy on out-of-distribution tasks - even after hitting 76% on training data.

Where It Works - And Where It Doesn’t

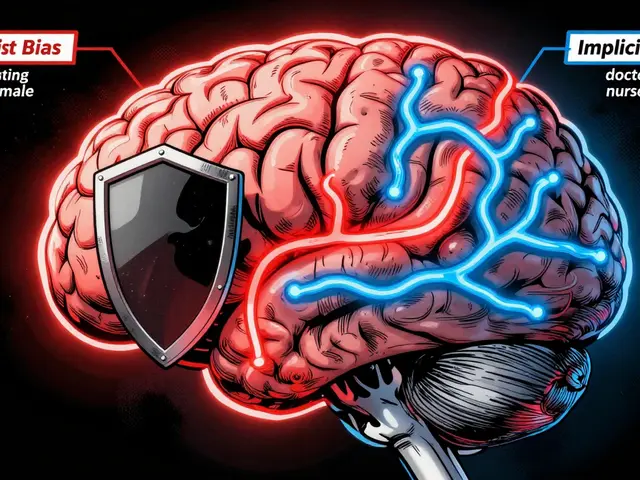

Distillation shines in math and logic. On arithmetic and algebra benchmarks, distilled models recover 78.3% of the teacher’s performance. That’s strong enough for tutoring apps, automated grading, or financial calculations.

But it stumbles in areas where reasoning is fuzzy. Temporal reasoning - "What happened before the war?" - only recovers 63.7%. Commonsense reasoning - "Why do people wear coats in winter?" - gets worse. A 2025 study found distilled models picked up 22.4% more stereotypes than the base model. Why? Because the teacher model had biases baked in, and the student copied them - not the facts, but the patterns.

Multimodal reasoning is the next frontier. Agent-of-Thoughts Distillation, presented at CVPR 2025, showed that small models can learn to reason through video sequences - like predicting what happens next in a cooking tutorial. Accuracy jumped to 83.2%, beating standard CoT by 6.4 points. That’s huge for robotics and surveillance systems.

Real-World Impact

The numbers tell the story. In Q3 2025, the market for distilled reasoning models hit $487 million. Companies are replacing 70B-parameter models with 13B distilled versions - cutting inference costs from $0.0042 per query to $0.00045. That’s a drop of roughly 89%. A bank in Chicago cut its fraud detection costs by 89% while keeping 92.7% accuracy.

But there’s a catch. These models degrade faster. A Stanford study found they lose capability 24% quicker than models trained from scratch. Why? They’re brittle. They’re trained on imitation, not understanding. So you need to retrain them more often.

And now regulators are watching. The EU’s AI Office issued new guidelines in September 2025: if you’re using distilled reasoning in legal, medical, or financial systems, you must disclose it. Why? Because the errors aren’t obvious. The model sounds confident. The steps look logical. But the logic is copied, not built.

The Future: Zero-CoT and Hybrid Systems

Meta AI’s new "Zero-CoT Distillation" is changing the game. Instead of feeding the model hundreds of reasoning chains, it learns to generate them on the fly - using only 10% of the data. It’s like teaching someone to think by showing them the outline of a thought, not the full script.

The smartest companies aren’t choosing between big and small models. They’re using both. A hybrid system runs a distilled model for 80% of queries - the simple, repetitive ones. When it’s uncertain, it passes the question to a large model. The user never knows. The cost stays low. The accuracy stays high.

This isn’t about replacing big models. It’s about making them practical. You don’t need a Ferrari to drive to the grocery store. Sometimes, a well-tuned bicycle is better.

Frequently Asked Questions

Can any small LLM be trained with chain-of-thought distillation?

Not all. Models need enough capacity to hold reasoning patterns. TinyLlama-1.1B works, but only with coarse reasoning steps. Models under 1 billion parameters struggle to internalize multi-step logic. Mistral-7B and Qwen-7B are the sweet spot - small enough to be cheap, large enough to reason. Below that, you get pattern matching, not understanding.

Do I need to use LoRA for distillation?

Not strictly, but it’s the only practical way for most teams. Full fine-tuning requires updating every parameter - that’s 100+ GPU days for a 7B model. LoRA trains only about 0.1% as many weights, cuts training to under 5 GPU days, and reaches about 97% of full fine-tuning performance. For anyone without a data center, LoRA is effectively essential.
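The reason LoRA trains so few weights can be shown with simple arithmetic. In LoRA, a frozen weight matrix of shape `d_out × d_in` gets a trainable low-rank update made of two small matrices, of shapes `d_out × r` and `r × d_in`. The sketch below counts those parameters for one layer; the dimensions (4096) and rank (8) are assumed values for illustration, and the whole-model fraction depends on which layers receive adapters, so this does not reproduce the 0.1% figure above.

```python
def lora_trainable_params(d_in, d_out, rank):
    """Trainable parameters added by one LoRA adapter:
    B is (d_out x rank) and A is (rank x d_in)."""
    return rank * (d_in + d_out)

def lora_fraction(d_in, d_out, rank):
    """Adapter size relative to the frozen (d_out x d_in) matrix it modifies."""
    return lora_trainable_params(d_in, d_out, rank) / (d_in * d_out)

# Assumed, illustrative dimensions for a single square projection matrix.
d_in = d_out = 4096
rank = 8

full = d_in * d_out
adapter = lora_trainable_params(d_in, d_out, rank)
print(f"frozen weights:  {full:,}")
print(f"adapter weights: {adapter:,}")
print(f"fraction:        {lora_fraction(d_in, d_out, rank):.4%}")
```

Lowering the rank or adapting fewer matrices shrinks the trainable share further, which is how whole-model fractions well under 1% come about.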

How much data do I need to get good results?

You need 7,000-17,000 high-quality reasoning chains. More isn’t always better. Quality matters more than quantity. A 2025 study found that training on 10,000 clean, structured chains outperformed 50,000 messy ones. Use curated datasets like CoT-Collection 2.0, which has 1.2 million verified chains across 27 domains.
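For budgeting, the generation rate quoted earlier in the article (about 2.3 A100 GPU-hours per 1,000 examples) can be turned into a quick estimate. This is only back-of-the-envelope arithmetic under that one assumed rate; it ignores filtering losses, retries, and differences between teacher models.

```python
GPU_HOURS_PER_1K = 2.3  # rate quoted in the article, for an A100

def generation_gpu_hours(num_chains, rate_per_1k=GPU_HOURS_PER_1K):
    """Estimated GPU-hours to generate `num_chains` reasoning chains."""
    return rate_per_1k * num_chains / 1000

for n in (7_000, 10_000, 17_000):
    print(f"{n:>6,} chains -> about {generation_gpu_hours(n):.1f} GPU-hours")
```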

Why do distilled models fail on new problems?

They memorize the structure, not the logic. If you train them on 10,000 algebra problems with the same format, they learn to replicate that pattern. But change the question slightly - add a new variable, reverse the order - and they break. This is called overfitting to reasoning templates. The fix? Train with diverse examples and test on out-of-distribution problems.
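One way to catch this early is to track in-distribution and out-of-distribution accuracy side by side. The sketch below uses exact-match scoring on invented toy predictions; the function names and data are hypothetical, and a real evaluation would use a proper answer normalizer and a held-out set built from genuinely different problem formats.

```python
def accuracy(predictions, references):
    """Exact-match accuracy after trimming whitespace."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references differ in length")
    if not references:
        raise ValueError("empty evaluation set")
    correct = sum(p.strip() == r.strip() for p, r in zip(predictions, references))
    return correct / len(references)

def generalization_gap(in_dist_acc, ood_acc):
    """Accuracy lost when moving from familiar to unfamiliar problem formats."""
    return in_dist_acc - ood_acc

# Toy results (invented): same model, two evaluation sets.
in_dist_preds, in_dist_refs = ["4", "10", "7", "3"], ["4", "10", "7", "5"]
ood_preds, ood_refs = ["12", "8", "1", "6"], ["12", "9", "2", "0"]

in_acc = accuracy(in_dist_preds, in_dist_refs)
ood_acc = accuracy(ood_preds, ood_refs)
print(f"in-distribution: {in_acc:.2f}")
print(f"out-of-distribution: {ood_acc:.2f}")
print(f"gap: {generalization_gap(in_acc, ood_acc):.2f}")
```

A large gap suggests the model has learned the template rather than the logic, which is the signal to add more varied training examples.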

Is chain-of-thought distillation safe for healthcare or finance?

Not without safeguards. The EU AI Office now requires disclosure because distilled models can hide reasoning errors. A model might give a correct diagnosis with a plausible explanation - but the logic was copied from a biased teacher. Always pair distilled models with human review in high-stakes settings. Use them for triage, not final decisions.
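The triage advice here and the hybrid setup described earlier share one mechanism: let the distilled model answer when it is confident, and escalate everything else. The sketch below is one possible shape for that, under stated assumptions: `student` is a hypothetical callable returning an answer plus a confidence score in [0, 1] (how that score is obtained is left open), `fallback` stands in for a larger model or a human review queue, and the 0.8 threshold is arbitrary. The cost function assumes every query pays for the student and escalated queries also pay for the fallback, using the per-query prices quoted in the article.

```python
from dataclasses import dataclass

@dataclass
class Routed:
    answer: str
    source: str  # "student" or "fallback"

def route(question, student, fallback, threshold=0.8):
    """Answer with the student if it is confident enough, else escalate."""
    answer, confidence = student(question)
    if confidence >= threshold:
        return Routed(answer, "student")
    return Routed(fallback(question), "fallback")

def expected_cost_per_query(escalation_rate, student_cost, fallback_cost):
    """Every query runs the student; a fraction also runs the fallback."""
    return student_cost + escalation_rate * fallback_cost

# Hypothetical stand-ins for real models.
def toy_student(question):
    return ("4", 0.95) if question == "2 + 2" else ("unsure", 0.30)

def toy_fallback(question):
    return f"escalated: {question}"

print(route("2 + 2", toy_student, toy_fallback))
print(route("Is this transaction fraudulent?", toy_student, toy_fallback))

# 20% escalation with the article's per-query prices.
cost = expected_cost_per_query(0.2, student_cost=0.00045, fallback_cost=0.0042)
print(f"expected cost per query: ${cost:.5f}")
```

In a high-stakes deployment the threshold would be tuned on held-out data, and the fallback for low-confidence cases would more likely be a person than another model.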

What’s the difference between chain-of-thought distillation and regular knowledge distillation?

Regular knowledge distillation teaches a small model to mimic the final output - like copying the answer to a multiple-choice question. Chain-of-thought distillation teaches the *process* - the step-by-step thinking. That’s why accuracy jumps from 49% to 63% on hard reasoning tasks. You’re not just learning what to say. You’re learning how to think.

Tom Mikota

January 11, 2026 AT 09:39

So you're telling me we're training AIs to fake deep thinking just so they don't cost $500/day? Brilliant. Next they'll be writing fake journal entries to sound smarter. I'm already seeing Reddit posts where the bot says 'First, I analyze the context... then I synthesize...' like it's a TED Talk. 😅

Mark Tipton

January 13, 2026 AT 02:17

While the empirical data presented is statistically significant, one must consider the epistemological implications of training models on imitative reasoning structures rather than emergent cognitive architectures. The 22.4% increase in stereotypical outputs observed in the Snorkel.ai study is not merely an artifact - it is a systemic failure of ontological grounding. When a model internalizes pattern replication as proxy for understanding, it does not achieve intelligence; it achieves mimicry. And mimicry, in high-stakes domains, is not merely inadequate - it is perilous.

Adithya M

January 14, 2026 AT 03:58

Bro, LoRA is the real MVP here. Full fine-tuning a 7B model? That's a waste of time and money. We did this at my lab with Mistral-7B and got 76% accuracy on math benchmarks with just 3 GPU days. And the data? Clean chains from DeepSeek R1, filtered with regex + rule-based sanity checks. No fluff. 10k examples, max. Anything more just adds noise. Stop overcomplicating it.

Jessica McGirt

January 15, 2026 AT 13:32

This is one of the clearest explanations of chain-of-thought distillation I've ever read. The distinction between pre-thinking, post-thinking, and adaptive-thinking is brilliant - and the data backing it up is compelling. I especially appreciate the note about error propagation in pre-thinking (23.7% is terrifying). And the 90% cost reduction? That’s not just efficiency - it’s democratization. Thank you for writing this.

Donald Sullivan

January 16, 2026 AT 13:52

Yeah right. 'Adaptive-thinking' is just a fancy name for 'guess and backfill'. You train a model to sound smart, not be smart. And now regulators are gonna make you label it? Good. People need to know they're talking to a robot that's faking its way through a math problem. It’s not AI - it’s AI cosplay.

Tina van Schelt

January 18, 2026 AT 06:56

Imagine a tiny model, barely bigger than a toaster, suddenly spitting out step-by-step logic like it just graduated from MIT. It’s like watching a goldfish learn to conduct an orchestra - and somehow, it works. 🎻🐟 The fact that structure trumps accuracy is wild. It’s not teaching them to think - it’s teaching them to *perform* thinking. And honestly? In the real world, performance is often enough. Still… creepy.

Ronak Khandelwal

January 19, 2026 AT 21:58

This is beautiful 🌱 We're not just building smarter models - we're building models that learn how to *learn*. The idea that even flawed reasoning chains can improve performance if the structure is consistent? That’s profound. It reminds me of how humans learn: we don’t need perfect answers, just a good framework. And when we combine this with hybrid systems? We’re not replacing giants - we’re empowering the little ones. 🙌 Let’s keep going!

Jeff Napier

January 20, 2026 AT 20:19

Chain-of-thought distillation? More like chain-of-thought manipulation. They're not teaching AI to think - they're teaching it to lie convincingly. And the fact that you're calling this 'progress'? Classic. The EU's disclosure rule? Too late. This tech is already in courtrooms, hospitals, banks. They're using fake logic to make decisions. And nobody's asking: who taught the teacher? Who trained the teacher? And what if the teacher was wrong? You're not building AI. You're building a hall of mirrors.