Imagine you’re running a customer support chatbot for an e-commerce site. You want it to quickly flag abusive messages, categorize product questions, and pull out order numbers, all in under 100 milliseconds. Now imagine you try to do that with a giant LLM like GPT-4. It takes 1.5 seconds per message. Users start leaving. Your team gets angry. Your boss asks why you didn’t just use something simpler.

This isn’t science fiction. It’s real. And it’s happening every day in companies trying to cut costs or chase the latest AI hype. The truth? NLP pipelines and end-to-end LLMs aren’t rivals. They’re teammates. The question isn’t which one is better. It’s: when do you build a pipeline, and when do you just prompt a model?

What’s Actually Happening Under the Hood

NLP pipelines are like assembly lines. Each step does one job: tokenize the text, tag parts of speech, find names and places, check sentiment, then route the result. Think of it like a factory where each worker has one tool and one task. You chain them together. If one worker messes up, you fix that one station. You don’t shut down the whole line.
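
To make the assembly-line idea concrete, here is a minimal sketch in plain Python. It deliberately avoids spaCy so it runs anywhere. The stage names (`tokenize`, `tag_entities`, `score_sentiment`, `route`) and the tiny word lists are hypothetical stand-ins for real trained components, not any library's API.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Doc:
    """Carries the text plus whatever each stage adds to it."""
    text: str
    tokens: List[str] = field(default_factory=list)
    entities: List[str] = field(default_factory=list)
    sentiment: int = 0
    route: str = ""


# Hypothetical word lists standing in for trained components.
POSITIVE = {"great", "love", "fast"}
NEGATIVE = {"broken", "late", "refund"}


def tokenize(doc: Doc) -> Doc:
    # Lowercase word tokens; keeps "#" so order numbers like "#12345" survive.
    doc.tokens = re.findall(r"[a-z0-9#]+", doc.text.lower())
    return doc


def tag_entities(doc: Doc) -> Doc:
    # Hypothetical rule: tokens shaped like "#12345" are order numbers.
    doc.entities = [t for t in doc.tokens if re.fullmatch(r"#\d+", t)]
    return doc


def score_sentiment(doc: Doc) -> Doc:
    # Count positive words minus negative words.
    doc.sentiment = sum(t in POSITIVE for t in doc.tokens) - sum(t in NEGATIVE for t in doc.tokens)
    return doc


def route(doc: Doc) -> Doc:
    # Negative messages that mention an order go to the support queue.
    if doc.sentiment < 0 and doc.entities:
        doc.route = "support"
    elif doc.sentiment < 0:
        doc.route = "review"
    else:
        doc.route = "general"
    return doc


Stage = Callable[[Doc], Doc]
PIPELINE: List[Stage] = [tokenize, tag_entities, score_sentiment, route]


def run(text: str, stages: List[Stage] = PIPELINE) -> Doc:
    doc = Doc(text)
    for stage in stages:  # each station does one job, in order
        doc = stage(doc)
    return doc
```

Because every stage has the same signature, a faulty station can be fixed or swapped without touching the others, e.g. `run("My order #12345 arrived broken").route` lands in the support queue.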

LLMs are more like a genius intern who can do everything, but only if you ask them the right way. Give them a prompt like, “Classify this review as positive or negative,” and they’ll try. But they might also add commentary, make up facts, or change their mind if you ask again. They’re flexible. They’re slow. And they’re expensive.

Here are the numbers: a simple NLP pipeline using spaCy can process 4,000 text snippets per second on a $50/month cloud server. An LLM like GPT-3.5 doing the same task? Around 150 per second. And it costs 20 to 100 times more per request. That’s not a trade-off; it’s a financial disaster if you’re handling thousands of requests daily.

When to Build a Pipeline (And Why You Should)

You should use an NLP pipeline when you need speed, consistency, and low cost. That’s most business tasks.

- Filtering hate speech in live chat

- Extracting product SKUs from customer emails

- Classifying support tickets into predefined categories

- Validating addresses or phone numbers in forms

These aren’t hard problems. They’re repetitive. And they need to work every single time. A pipeline built with spaCy or NLTK can hit 93% accuracy on these tasks with minimal training. It doesn’t hallucinate. It doesn’t drift. It doesn’t cost $50/hour to run.
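
For the SKU-extraction and ticket-routing cases listed above, a rule-based version can be a few lines of regular expressions. This is a sketch under assumptions: the SKU format (`ABC-1234`) and the category keywords are invented for illustration, so substitute your own catalog's pattern and categories.

```python
import re
from typing import Dict, List

# Hypothetical SKU format: three capital letters, a hyphen, four digits.
SKU_PATTERN = re.compile(r"\b[A-Z]{3}-\d{4}\b")

# Hypothetical category rules, checked in order; the first match wins.
CATEGORY_RULES: Dict[str, List[str]] = {
    "shipping": ["tracking", "delivery", "shipped", "arrive"],
    "billing": ["refund", "charge", "invoice", "payment"],
    "product": ["size", "color", "broken", "defective"],
}


def extract_skus(text: str) -> List[str]:
    return SKU_PATTERN.findall(text)


def classify_ticket(text: str) -> str:
    words = set(re.findall(r"[a-z]+", text.lower()))
    for category, keywords in CATEGORY_RULES.items():
        if words.intersection(keywords):
            return category
    return "other"  # deterministic fallback: same input, same label
```

The exact-word matching is intentionally strict ("charged" does not match "charge"); in a real pipeline you would add lemmatization or more keywords, but the behavior stays predictable either way.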

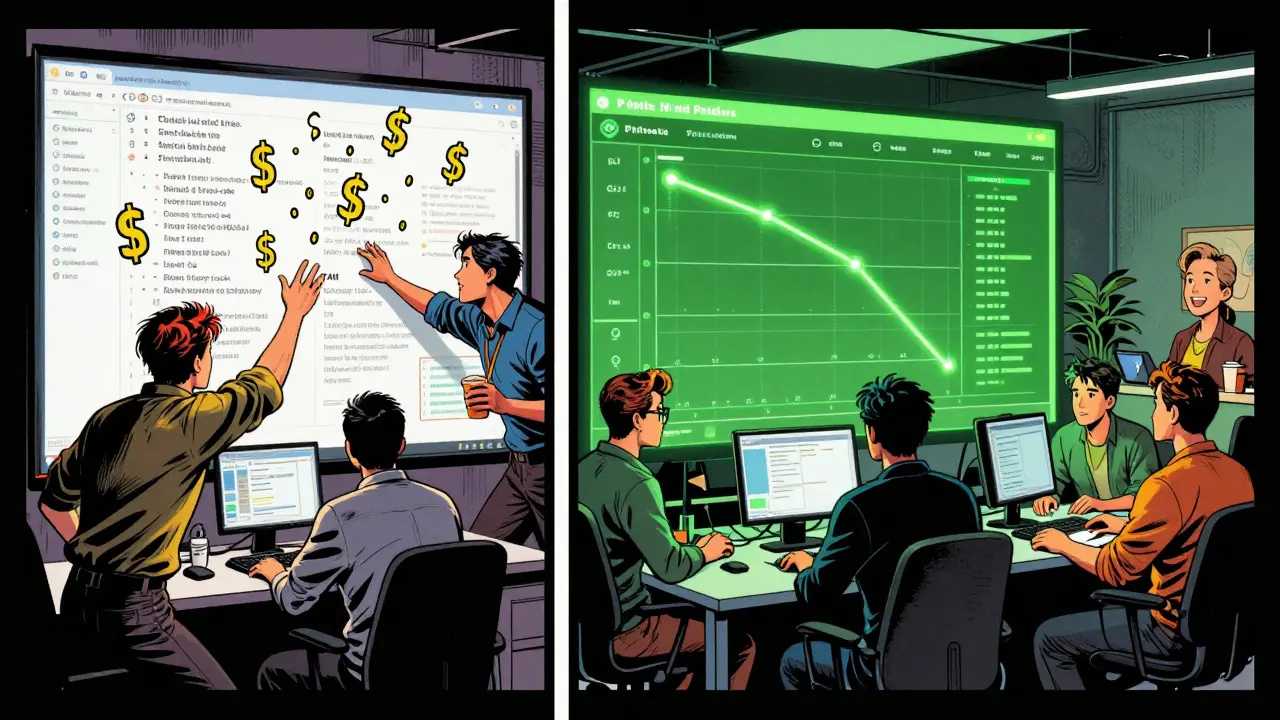

One company in Asheville (let’s call them LocalRetail) used to run an LLM for tagging product reviews. They spent $1,200/month. Accuracy? 88%. Then they switched to a pipeline with custom rules for keywords like “broken,” “leaked,” and “too small.” Cost dropped to $180/month. Accuracy went up to 94%. Why? Because they knew exactly what words meant what. No need for a giant model to guess.
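
A rough sketch of the kind of keyword rules described for LocalRetail. The tags and phrase lists are hypothetical; the point is that every rule is explicit, so a wrong tag can be traced back to one phrase. Word boundaries keep short triggers such as "late" from firing inside "chocolate".

```python
import re
from typing import Dict, List

# Hypothetical tag -> trigger phrases (multi-word phrases are allowed).
REVIEW_RULES: Dict[str, List[str]] = {
    "damaged": ["broken", "cracked", "leaked", "leaking"],
    "sizing": ["too small", "too big", "runs small", "runs large"],
    "shipping": ["late", "never arrived", "lost in transit"],
}

# Precompile one word-boundary pattern per tag.
_PATTERNS = {
    tag: re.compile(r"\b(?:" + "|".join(re.escape(p) for p in phrases) + r")\b")
    for tag, phrases in REVIEW_RULES.items()
}


def tag_review(review: str) -> List[str]:
    """Return every tag whose phrases appear in the review, sorted."""
    text = review.lower()
    return sorted(tag for tag, pattern in _PATTERNS.items() if pattern.search(text))
```

For example, `tag_review("The bottle leaked and the lid is too small")` returns both `damaged` and `sizing`.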

Pipelines also win when you need auditability. In finance or healthcare, regulators demand to know how a decision was made. You can show them: “We checked for ‘fraud’ in the text, matched it against our rule list, then flagged it.” With an LLM? You can’t. It’s a black box. And that’s a compliance nightmare.
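
Much of that auditability comes from recording which rule fired. A minimal sketch, assuming a hypothetical, version-controlled rule list (`RULES`) with made-up rule IDs; each decision is returned together with the IDs of the rules that produced it, and can be serialized as an audit record.

```python
import json
import re
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Rule:
    rule_id: str
    pattern: str  # regular expression applied to lowercased text


# Hypothetical rules; IDs let a reviewer see exactly why text was flagged.
RULES: List[Rule] = [
    Rule("FRAUD-001", r"\bfraud(ulent)?\b"),
    Rule("FRAUD-002", r"\bunauthori[sz]ed (charge|transaction)\b"),
]


def evaluate(text: str) -> Tuple[str, List[str]]:
    """Return the decision and the IDs of every rule that matched."""
    lowered = text.lower()
    fired = [rule.rule_id for rule in RULES if re.search(rule.pattern, lowered)]
    return ("flag" if fired else "pass"), fired


def audit_record(text: str) -> str:
    """Serialize input, decision, and fired rules for a compliance log."""
    decision, fired = evaluate(text)
    return json.dumps({"input": text, "decision": decision, "rules_fired": fired}, sort_keys=True)
```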

When to Just Prompt (And When You’re Asking for Trouble)

Use an LLM when you need creativity, context, or open-ended understanding.

- Writing personalized product descriptions from a short bullet list

- Summarizing 50 research papers into one executive insight

- Answering vague customer questions like, “What’s the best way to use this?”

- Translating idioms or cultural references across languages

These tasks don’t have clear rules. You can’t code them. You need a model that understands nuance. That’s where LLMs shine. A 2025 study in Nature showed LLMs pulled 87% of key relationships from materials science papers using only prompts, with no training. Traditional NLP pipelines? Only 72%. The LLM saw connections humans missed.

But here’s the catch: LLMs are unreliable. Same input. Different output. That’s called non-determinism. One day it says “this product is safe.” The next day, “might cause allergic reactions.” You can’t build a legal document or a medical alert on that.

And hallucinations? Real. A 2024 analysis by GeeksforGeeks found LLMs invented facts in 15-25% of complex reasoning tasks. That’s not a bug. It’s how they’re built. They predict words. They don’t know truth.
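
Why the same prompt can produce different answers: when a model samples its next token from a probability distribution (temperature above zero), repeated runs can diverge, while greedy decoding always picks the single most likely token. The toy scores below are made up and real LLM decoding involves far more machinery, but the mechanism this sketch shows is the same.

```python
import math
import random
from typing import Dict

# Made-up next-token scores (logits) after a prompt like "This product is".
LOGITS: Dict[str, float] = {"safe": 2.0, "risky": 1.2, "untested": 0.8}


def softmax(logits: Dict[str, float], temperature: float) -> Dict[str, float]:
    scaled = {tok: v / temperature for tok, v in logits.items()}
    top = max(scaled.values())
    exps = {tok: math.exp(v - top) for tok, v in scaled.items()}  # subtract max for stability
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def greedy(logits: Dict[str, float]) -> str:
    # Deterministic: always the highest-scoring token.
    return max(logits, key=logits.get)


def sample(logits: Dict[str, float], temperature: float, rng: random.Random) -> str:
    # Stochastic: draws a token in proportion to its probability.
    probs = softmax(logits, temperature)
    return rng.choices(list(probs), weights=list(probs.values()), k=1)[0]
```

Calling `sample(LOGITS, 1.0, rng)` in a loop will typically return more than one distinct token, which is the "different output, same input" behavior described above; lowering the temperature pushes sampling toward the greedy answer.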

The Hybrid Approach: What the Smartest Companies Are Doing

The best systems don’t pick one. They combine both.

Here’s how it works in practice:

- Use an NLP pipeline to clean and structure the input. Extract names, dates, entities, and keywords.

- Feed that clean data into the LLM with a precise prompt.

- Use another NLP step to validate the LLM’s output. Check for hallucinations, missing info, or contradictions.
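
The three steps above can be wired together as in this sketch. `call_llm` is a hypothetical placeholder for whatever model client you use (pass in a stub to run it offline), and the six-digit order-number format is an assumption. The validation step is deliberately simple: it rejects any answer that mentions an order number the extractor never saw in the input.

```python
import re
from typing import Callable, Dict, List

ORDER_RE = re.compile(r"\b\d{6}\b")  # assumed: order numbers are six digits


def extract(text: str) -> Dict[str, List[str]]:
    """Step 1: deterministic extraction before the LLM sees anything."""
    return {"order_numbers": ORDER_RE.findall(text)}


def build_prompt(text: str, facts: Dict[str, List[str]]) -> str:
    """Step 2: a precise prompt that hands the model the extracted facts."""
    orders = ", ".join(facts["order_numbers"]) or "none"
    return (
        "Summarize the customer's issue in one sentence.\n"
        f"Known order numbers: {orders}\n"
        "Only mention order numbers from the list above.\n"
        f"Message: {text}"
    )


def validate(answer: str, facts: Dict[str, List[str]]) -> bool:
    """Step 3: reject answers citing order numbers not found in the input."""
    return set(ORDER_RE.findall(answer)) <= set(facts["order_numbers"])


def handle(text: str, call_llm: Callable[[str], str]) -> str:
    facts = extract(text)
    answer = call_llm(build_prompt(text, facts))
    if not validate(answer, facts):
        return "ESCALATE_TO_HUMAN"  # hypothetical fallback when validation fails
    return answer
```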

That’s what GetStream did for their content moderation system. They let NLP handle 90% of obvious spam and abuse. The LLM only stepped in when the text was ambiguous-like sarcasm or coded language. Result? Costs dropped 85%. Accuracy went up. And response times stayed under 100ms.
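
The routing idea (cheap rules for clear-cut cases, the LLM only for the ambiguous middle) can be sketched as a pair of confidence thresholds. This is not GetStream's code: the blocklist, the crude scorer, the thresholds, and the `llm_moderate` callable are all hypothetical and would need tuning on your own labeled data.

```python
from typing import Callable, Tuple

# Hypothetical blocklist and thresholds.
BLOCKLIST = {"idiot", "scam", "spam"}
ALLOW_BELOW = 0.2
BLOCK_ABOVE = 0.8


def rule_score(text: str) -> float:
    """Crude abuse score: share of blocklisted words, scaled by 3 and capped at 1."""
    words = text.lower().split()
    if not words:
        return 0.0
    hits = sum(w.strip(".,!?") in BLOCKLIST for w in words)
    return min(1.0, 3 * hits / len(words))


def moderate(text: str, llm_moderate: Callable[[str], str]) -> Tuple[str, str]:
    """Return (decision, component that decided)."""
    score = rule_score(text)
    if score >= BLOCK_ABOVE:
        return "block", "rules"
    if score <= ALLOW_BELOW:
        return "allow", "rules"
    # Only the ambiguous band (sarcasm, coded language) pays for an LLM call.
    return llm_moderate(text), "llm"
```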

Elastic’s ESRE engine does the same. It uses BM25 (a classic keyword-based ranking function) to find relevant documents first. Then it uses an LLM to rank and summarize them. The LLM doesn’t search. It refines. That cuts its workload by 40% and boosts relevance by 12%.
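
For readers unfamiliar with BM25, here is a compact, self-contained version of Okapi BM25 with the common defaults k1 = 1.2 and b = 0.75 and a Lucene-style IDF, used to pick the top documents that would then be handed to an LLM. It is an illustration of the retrieve-first step, not Elastic's code; in Elasticsearch, BM25 is already the default similarity and you would not reimplement it.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


class BM25:
    def __init__(self, docs: List[str], k1: float = 1.2, b: float = 0.75):
        self.k1, self.b = k1, b
        self.docs = [tokenize(d) for d in docs]
        self.tfs = [Counter(d) for d in self.docs]
        self.avgdl = sum(len(d) for d in self.docs) / len(self.docs)
        n = len(self.docs)
        df = Counter(term for d in self.docs for term in set(d))
        # Lucene-style IDF, which stays non-negative even for very common terms.
        self.idf = {t: math.log(1 + (n - f + 0.5) / (f + 0.5)) for t, f in df.items()}

    def score(self, query: str, i: int) -> float:
        tf, dl = self.tfs[i], len(self.docs[i])
        total = 0.0
        for term in tokenize(query):
            f = tf.get(term, 0)
            if f == 0:
                continue
            # Term-frequency saturation (k1) and document-length normalization (b).
            norm = f + self.k1 * (1 - self.b + self.b * dl / self.avgdl)
            total += self.idf[term] * f * (self.k1 + 1) / norm
        return total

    def top_k(self, query: str, k: int = 3) -> List[Tuple[int, float]]:
        scored = [(i, self.score(query, i)) for i in range(len(self.docs))]
        return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]
```

Only the documents returned by `top_k` would be passed to the LLM for ranking and summarization, which is what keeps its workload small.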

Reddit user u/DataEngineer2023 summed it up: “We run spaCy to pull out product names and issues, then feed that to Llama-3 to map relationships, then validate with rules. Error rate dropped 63%. Cost stayed under $500/day.” That’s the sweet spot.

Cost, Speed, and Scalability: The Hard Numbers

Let’s compare real-world costs for processing 1 million text requests:

| Metric | NLP Pipeline | LLM (GPT-3.5) |
|---|---|---|
| Cost per 1,000 tokens | $0.0003 | $0.003 |
| Latency per request | 8 ms | 1,200 ms |
| Accuracy on simple tasks | 90-95% | 70-80% |
| Accuracy on complex tasks | 70-75% | 85-90% |
| Hardware needed | Standard CPU | NVIDIA A100 GPU |
| Uptime reliability | 99.99% | 98.5% |

Notice something? On simple tasks, NLP wins on cost, speed, and reliability. On complex tasks, LLMs pull ahead, but only if you can afford the latency and the price tag.

What Happens When You Go All-In on LLMs?

Companies that try to replace pipelines with LLMs often regret it.

A startup in Austin tried using GPT-3.5 for customer support. They thought, “Why build rules? Just let the AI answer everything.” Within three weeks:

- Average response time: 1.8 seconds → 37% of users abandoned chat

- LLM invented product features that didn’t exist → 12 customer complaints

- Cost jumped from $150/month to $4,200/month

- They had to hire three people to manually correct outputs

They switched back to a hybrid model. Within a month, costs dropped 80%. User satisfaction went up. And they didn’t lose a single customer.

Another example: a healthcare provider used an LLM to code medical records. It was 2% more accurate than their NLP system. But it cost 100x more. And it hallucinated drug interactions. The compliance team shut it down. They kept the pipeline. They added human review. Done.

Future-Proofing Your NLP Strategy

The trend isn’t LLMs replacing pipelines. It’s pipelines making LLMs better.

Companies are now using NLP to pre-process inputs for LLMs. Instead of feeding raw customer emails to GPT-4, they first extract: who, what, when, where, why. Then they send a clean, structured prompt. Result? 65% fewer tokens used. 9% higher accuracy. Half the cost.
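
A minimal sketch of the "extract first, then prompt" idea: pull a few fields out of a raw email and send only those. The patterns are hypothetical and far simpler than a real extractor, only a subset of who/what/when/why is covered, and word counts serve as a rough stand-in for tokens (real tokenizers count differently).

```python
import re
from typing import Dict

SAMPLE_EMAIL = (
    "From: Dana Lee\n"
    "Subject: Broken kettle\n"
    "Hi there, I hope you are well. I bought a kettle from you last month and I have "
    "always been happy with the store, but order 481516 arrived on March 3 and the lid "
    "is broken. Could you send a replacement? Thanks so much, Dana\n"
)

MONTHS = "jan|feb|mar|apr|may|jun|jul|aug|sep|oct|nov|dec"


def _find(pattern: str, text: str) -> str:
    match = re.search(pattern, text, flags=re.IGNORECASE | re.MULTILINE)
    return match.group(1).strip() if match else "unknown"


def extract_fields(email: str) -> Dict[str, str]:
    """Pull a few structured fields out of a raw email (hypothetical patterns)."""
    return {
        "who": _find(r"^from:\s*(.+)$", email),
        "what": _find(r"^subject:\s*(.+)$", email),
        "order": _find(r"\b(\d{6})\b", email),
        "when": _find(rf"\bon ((?:{MONTHS})[a-z]* \d{{1,2}})\b", email),
        "request": _find(r"(could you [^.?!]*[.?!])", email),
    }


def structured_prompt(fields: Dict[str, str]) -> str:
    lines = [f"{key}: {value}" for key, value in fields.items()]
    return "Draft a short support reply using only these facts.\n" + "\n".join(lines)


def word_count(text: str) -> int:
    return len(text.split())  # rough proxy for tokens
```

On the sample email, the structured prompt comes out at roughly half the words of the raw message, and the model no longer has to wade through pleasantries to find the order number.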

LLM providers are catching on too. Anthropic’s Claude 3.5 now has a “deterministic mode” that reduces output variation by 78%. But it’s slower. And it still costs more than a pipeline.

The future belongs to systems that know when to be simple and when to be smart. NLP pipelines aren’t outdated. They’re the foundation. LLMs aren’t magic. They’re tools: expensive, powerful, and risky if misused.

If you’re starting fresh, build with pipelines first. Add LLMs only where you truly need creativity or context. Never use an LLM for real-time moderation, compliance, or high-volume classification. Always validate its output. Always track costs. Always measure accuracy, not just on average but on edge cases.
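
Measuring accuracy on edge cases can be as simple as tagging each test example with a slice and reporting accuracy per slice alongside the overall number. A minimal sketch; the slice names and whatever `predict` function you pass in are hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, Tuple

Example = Tuple[str, str, str]  # (text, expected_label, slice_name)


def accuracy_by_slice(examples: Iterable[Example], predict: Callable[[str], str]) -> Dict[str, float]:
    """Return accuracy for 'overall' and for every slice seen in the data."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for text, expected, slice_name in examples:
        hit = predict(text) == expected
        for key in ("overall", slice_name):
            total[key] += 1
            correct[key] += hit
    return {key: correct[key] / total[key] for key in total}
```

A classifier can score well on the "overall" key while getting a rare slice such as sarcasm completely wrong; reporting that slice separately is what exposes it.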

Because the best AI doesn’t try to do everything. It knows what it’s good at, and what it should leave to something simpler.

Can I use LLMs instead of NLP pipelines for everything?

No. LLMs are slow, expensive, and unreliable for simple, high-volume tasks. They’re great for open-ended reasoning but terrible for real-time filtering, classification, or compliance. Using them for everything will break your budget and your user experience.

How much does it cost to run an NLP pipeline vs an LLM?

Processing 1,000 tokens costs about $0.0003 with a pipeline and $0.003 to $0.12 with an LLM. For 1 million requests of roughly 1,000 tokens each, that’s $300 vs $3,000 to $120,000. Pipelines run on cheap CPUs. LLMs need GPUs or cloud APIs, and both cost more and add latency.
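
The arithmetic behind those totals, as a small sketch. The per-1,000-token prices are the ones quoted above; the figure of 1,000 tokens per request is an assumption, so plug in your own average request size.

```python
# Prices per 1,000 tokens quoted above; tokens_per_request below is an assumption.
PRICES_PER_1K = {"pipeline": 0.0003, "llm_low": 0.003, "llm_high": 0.12}


def batch_cost(requests: int, tokens_per_request: int, price_per_1k: float) -> float:
    """Cost = (requests * tokens per request / 1,000) * price per 1,000 tokens."""
    return requests * tokens_per_request / 1000 * price_per_1k


for name, price in PRICES_PER_1K.items():
    print(f"{name}: ${batch_cost(1_000_000, 1_000, price):,.0f}")
# pipeline: $300
# llm_low: $3,000
# llm_high: $120,000
```

Because cost scales linearly with tokens, halving the prompt size (for example by sending extracted fields instead of raw text) halves the bill at any price point.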

Are NLP pipelines outdated now that LLMs exist?

Not at all. NLP pipelines are faster, cheaper, and more reliable for well-defined tasks. Think of them like a scalpel: precise, efficient, and essential for surgery. LLMs are like a Swiss Army knife: versatile but overkill for simple cuts. Most successful systems use both.

What’s the biggest mistake companies make with LLMs?

Assuming LLMs are accurate or deterministic. They’re not. They hallucinate. They change answers. They cost more than expected. The biggest mistake is replacing rule-based systems with LLMs without validation layers. Always add NLP checks after LLM output.

How do I start building a hybrid system?

Start simple. Use spaCy or NLTK to extract entities and clean text. Then send that structured data to an LLM with a clear prompt. Finally, validate the LLM’s output with rule-based checks. Track cost, speed, and accuracy. Scale the LLM only where it adds real value.

Do I need to retrain LLMs constantly?

You don’t retrain them; you revise your prompts. LLMs don’t learn from new data unless you fine-tune them, which is expensive. Instead, update your prompts, add examples, or use prompt versioning. Many companies track prompt performance and auto-test new versions before rolling them out.
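
A minimal sketch of auto-testing a new prompt version before rollout: score each version against a small golden set and promote the candidate only if it does not regress. `PROMPTS`, `GOLDEN_SET`, and the `model` callable are hypothetical; in practice `model` would call your LLM provider, and you would run several samples per case because outputs can vary between calls.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical versioned prompt templates.
PROMPTS: Dict[str, str] = {
    "v1": "Classify the sentiment: {text}",
    "v2": "Classify the sentiment as exactly one word, positive or negative: {text}",
}

# Hypothetical golden set of (input, expected label).
GOLDEN_SET: List[Tuple[str, str]] = [
    ("I love this blender", "positive"),
    ("It broke after one day", "negative"),
]


def score_prompt(version: str, model: Callable[[str], str]) -> float:
    """Fraction of golden cases where the model's output matches exactly."""
    hits = 0
    for text, expected in GOLDEN_SET:
        output = model(PROMPTS[version].format(text=text))
        hits += output.strip().lower() == expected
    return hits / len(GOLDEN_SET)


def should_promote(current: str, candidate: str, model: Callable[[str], str]) -> bool:
    # Promote only if the candidate scores at least as well on the golden set.
    return score_prompt(candidate, model) >= score_prompt(current, model)
```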
