Consent Management and User Rights in LLM-Powered Applications: What You Need to Know in 2026

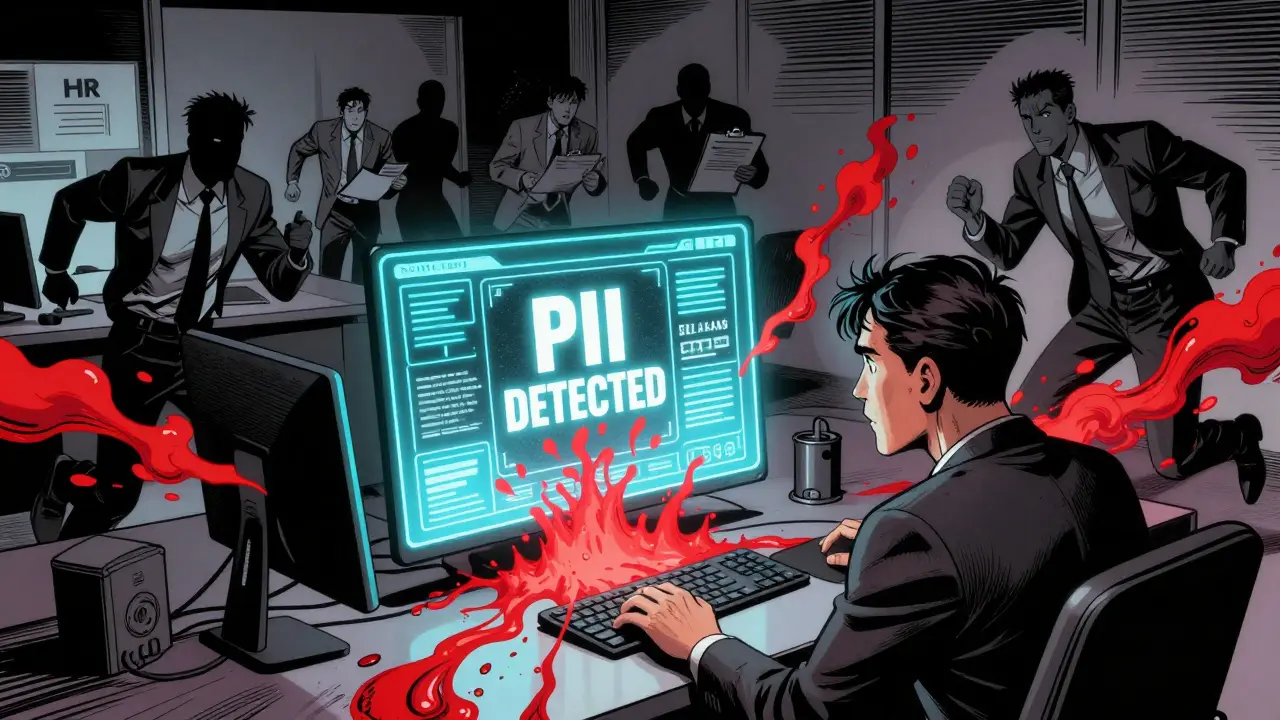

LLM-powered apps collect your data in ways cookies never did. Learn how consent management is evolving in 2026 to protect user rights, and why most systems still fall short.